CPU-only LLM Inference

In this article, we’ll be putting our second-hand AMD Threadripper 1950x to some inference tests 🔥 - can you already smell some overheated plastic? No? That’s because the be quiet! is at the heart of our QuietBee 🐝😎

Quick intro - Niche AI trajectory

Alright, let’s take a step back - we’ve built the base for our AI home-lab (called QuietBee) - but as long as the GPUs aren’t here yet, QuietBee just sits there without any work 😟. However, while doing some Nichebench testing we had to use some local models, so we ran some random non-optimized CPU inference on QuietBee - and it was quite okay (yielding 5-10 tokens per second, depending on the model).

But wait. Why are we doing this at all, you’re asking? I got you → we’re on our path to build open-weight Niche AI models - fine-tuned flavors that can perform exceptionally well in niche domains, think: Drupal, WordPress, Laravel, etc. We started the series with this super tiny fine-tuning experiment, and we’re still experimenting.

Alright, now that you’re up to speed - let’s dive into the article. Specs for the rest of the article: we’re running an AMD Threadripper 🧵 1950x with 94 GB of RAM (I need 32 GB more to make it full quad-channel). I also capped the CPU at 3.6 GHz - this is important, I’m NOT running full-speed. The reason is that my PSU can’t take it - it’s pending to be changed next month 🤫

So our results are RELATIVE. Also, the article is less about the results and more about the knobs, compilations and approach.

Targets: 🧠 What you’re actually optimizing for

For most things we’ll be using llama.cpp and a CPU-specific fork (ik_llama.cpp).

When you build llama.cpp, you get the llama-bench executable, which you can use to run benchmarks.

There are 3 metrics we can use:

- Prefill (pp) - how fast the model processes your prompt (input). Matters for RAG, retrieval, few-shot, and long-context summarization. We care about this one, since programming tasks usually come with a LOT of context.

- Decode (tg) - how fast the model generates (output). Matters for chatbots, code completion, streaming.

- Mixed (pp+tg) - a combined test that’s closer to real-world workloads (both long prompts and continuous generation).

When turning various knobs on CPU, you will usually optimize for one of these metrics; it’s rare to bump all 3 at the same time.

Here’s a sample output:

Also:

- Different models behave differently - in all my tests we used the same model: openai/gpt-oss-20B @ Q4

- Different quantizations also behave differently - to dive deep into the rabbit-hole see this discussion.

- Pinning which CPU cores / memory nodes your runs use can apparently also make generation speeds more stable and repeatable.
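To make the three metrics concrete, here is a minimal Python sketch that builds one llama-bench command per metric using its `-p` (prompt tokens), `-n` (generated tokens) and `-pg` (prompt,generation pair) options. The model path is a placeholder, and the token counts simply mirror the sizes this article quotes for a single run; treat it as an illustration under those assumptions, not as the exact commands we ran.

```python
# Sketch: one llama-bench invocation per metric.
# MODEL is a hypothetical placeholder path; adjust to your setup.
BENCH = "build/bin/llama-bench"
MODEL = "models/your-model.gguf"


def bench_args(metric: str) -> list[str]:
    """Build an argv list that measures a single metric."""
    base = [BENCH, "-m", MODEL]
    if metric == "prefill":   # pp: prompt processing only
        return base + ["-p", "512", "-n", "0"]
    if metric == "decode":    # tg: token generation only
        return base + ["-p", "0", "-n", "128"]
    if metric == "mixed":     # pp+tg: a prompt followed by generation
        return base + ["-p", "0", "-n", "0", "-pg", "256,512"]
    raise ValueError(f"unknown metric: {metric}")


for name in ("prefill", "decode", "mixed"):
    print(name, "->", " ".join(bench_args(name)))
```

Setting `-p` or `-n` to 0 is meant to skip that test, so each command isolates one number; run without those overrides to get the default tests in one go.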

Process & knobs: 🔧 What we tuned and how

🛠️ Build variants:

These are the build types we compared - different sources (original vs fork) and different build flags set before compiling:

- Vanilla - a simple llama.cpp build - default CPU flags - reference here;

- BLAS / BLIS - enabled optimized linear algebra - same reference as above - sometimes these help in token generation (output), but by a little;

- IK native - ik_llama.cpp - a fork of llama.cpp with specially optimized knobs here and there, built with the flag `march=native` (I still could not enable FANCY SIMD);

- … various other combinations and flags you can try out.
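As a rough sketch of how such build variants can be kept side by side, the snippet below maps each variant to a set of CMake options and a separate build directory. The `GGML_BLAS` / `GGML_BLAS_VENDOR` options are the ones upstream llama.cpp documents for BLAS and BLIS builds; that the fork accepts the same option names, and the `build-<variant>` directory naming, are assumptions here, so check each repo's build docs before relying on it.

```python
# Sketch: CMake configure/build commands per build variant.
# Option names follow upstream llama.cpp docs; whether ik_llama.cpp
# accepts the same names is an ASSUMPTION.
BUILD_VARIANTS = {
    "vanilla": [],
    "blas": ["-DGGML_BLAS=ON", "-DGGML_BLAS_VENDOR=OpenBLAS"],
    "blis": ["-DGGML_BLAS=ON", "-DGGML_BLAS_VENDOR=FLAME"],
}


def configure_cmd(variant: str) -> list[str]:
    """cmake configure step, one build directory per variant."""
    build_dir = f"build-{variant}"  # hypothetical naming scheme
    return ["cmake", "-B", build_dir, "-DCMAKE_BUILD_TYPE=Release",
            *BUILD_VARIANTS[variant]]


def build_cmd(variant: str, jobs: int = 16) -> list[str]:
    """cmake build step for the directory configured above."""
    return ["cmake", "--build", f"build-{variant}",
            "--config", "Release", "-j", str(jobs)]


for v in BUILD_VARIANTS:
    print(" ".join(configure_cmd(v)))
    print(" ".join(build_cmd(v)))
```

Keeping one build directory per variant means every llama-bench binary stays around, so later runs can compare them without rebuilding.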

🎛️ Runtime knobs

- Threads – scaling beyond 16 threads didn’t help decode, 14-16 was best.

- NUMA – this controls which CPU cores / memory nodes are used.

- uBatch and Batch – playing with this knob can increase performance by a little.

- KV cache – `q8_0/f16` was the biggest decode (output) win (but not in ik_llama though)

- Flash Attention – modest prefill boost (~3–5%), no decode gain (there are also flavors)
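The runtime knobs above multiply quickly, so here is a small sketch that expands a grid of values into individual llama-bench argument lists. The flags used (`-t`, `-b`, `-ub`, `-ctk`, `-fa`) are llama-bench options for threads, batch, micro-batch, K-cache type and flash attention; the specific values in `GRID` are illustrative picks, not the exact grid we ran.

```python
from itertools import product

# Sketch: expand a knob grid into llama-bench argument lists.
# The values below are illustrative, not the article's actual grid.
GRID = {
    "-t": ["14", "16"],        # threads
    "-b": ["512", "2048"],     # batch size
    "-ub": ["128", "512"],     # micro-batch size
    "-ctk": ["f16", "q8_0"],   # K-cache type
    "-fa": ["0", "1"],         # flash attention off/on
}


def expand(grid: dict[str, list[str]]):
    """Yield one flat argument list per combination of knob values."""
    flags = list(grid)
    for values in product(*grid.values()):
        args: list[str] = []
        for flag, value in zip(flags, values):
            args += [flag, value]
        yield args


combos = list(expand(GRID))
print(len(combos), "combinations, first:", " ".join(combos[0]))
```

llama-bench can also take comma-separated values for a parameter and sweep them itself; expanding the grid outside the binary mainly helps when each combination also needs a different binary or pinning mode.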

The process overall looks like this - step-by-step of sorts:

- You clone the llama.cpp (or ik_llama.cpp);

- You read the docs on how to run a BUILD - get all the dependencies installed;

- You run the build → get your `build/bin/llama-bench`

- You can now run the bench with various parameters / knobs.

After experimenting A LOT, we realized that for this to be done reliably, due to small variances here and there, it’s better to create a wrapper shell command and run a bulk batch, and then compare the runs.
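To show why repeated runs matter, here is a minimal sketch that summarizes several measurements per configuration as mean and standard deviation, and only calls one config faster when the gap exceeds the combined noise. The numbers are made up for illustration, and the "gap larger than the sum of both standard deviations" rule is a simple heuristic we are assuming here, not a formal statistical test.

```python
from statistics import mean, stdev

# Sketch: summarize repeated runs so small variances don't mislead.
# All t/s values below are ILLUSTRATIVE, not measured results.
runs = {
    "config_a": [90.8, 91.9, 91.4],
    "config_b": [91.2, 91.6, 91.0],
    "config_c": [56.5, 57.1, 56.8],
}


def summarize(samples: dict[str, list[float]]) -> list[tuple[str, float, float]]:
    """Return (name, mean, stdev) rows sorted by mean, fastest first."""
    rows = [
        (name, mean(vals), stdev(vals) if len(vals) > 1 else 0.0)
        for name, vals in samples.items()
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)


def clearly_faster(a: tuple[str, float, float], b: tuple[str, float, float]) -> bool:
    """Heuristic: a beats b only if the gap exceeds both stdevs combined."""
    return a[1] - b[1] > a[2] + b[2]


table = summarize(runs)
for name, avg, sd in table:
    print(f"{name}: {avg:.2f} ± {sd:.2f} t/s")
```

With samples like these, the top two configs land within noise of each other while both clearly beat the third, which is exactly the kind of call that is hard to make from single runs.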

The output of a single run looks something like:

Here you get the 512 tokens prefill (input processing), 128 tokens decoding (output generation) and a mixture of 256 prefill + 512 generation.

Going back and forth, we ended up with a huge table of these runs, and it got very hard to compare stuff. So we decided to code (with some help from Claude) a llama-bench wrapper - https://github.com/HumanFace-Tech/hft-cpu-test/ - this tool 🛠️ allows you to:

- Run basic computer/setup checks ✅

- Define a config.yml for 🔭 exploratory (superficial) setups you want to explore…

- Run these in one batch and get a final comparison output 📊

- Use the output from exploratory run → to create a more in-depth run (check more knobs) to find the ultimate configs for best runs 🎯

Looks something like:

We use it ourselves (and plan to keep using it once I get a proper PSU that can handle an extra CPU EPS connection, and enable XMP on my RAM) - hope it will be useful for someone else out there 🌎 - if you’re that someone, I’d appreciate a ⭐ on that repo 😅.
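The explore-then-go-deeper idea can be sketched in a few lines: take the exploratory results, keep the top few configs per metric, and feed only those into the in-depth run. This is a hypothetical illustration of the concept, not the tool's actual implementation; the config names, metric keys and numbers are all made up.

```python
# Sketch: pick which exploratory configs deserve a deeper run.
# NOT the hft-cpu-test implementation; names and numbers are hypothetical.
exploratory = {
    "build_a/pinned":   {"pp512": 90.0, "tg128": 16.5, "mixed": 89.5},
    "build_a/unpinned": {"pp512": 80.0, "tg128": 17.5, "mixed": 79.0},
    "build_b/pinned":   {"pp512": 60.0, "tg128": 17.0, "mixed": 59.0},
    "build_b/unpinned": {"pp512": 55.0, "tg128": 15.0, "mixed": 54.0},
}


def promote(results: dict[str, dict[str, float]],
            metrics: tuple[str, ...] = ("pp512", "tg128", "mixed"),
            top_k: int = 2) -> list[str]:
    """Keep the top_k configs per metric, without duplicates."""
    promoted: list[str] = []
    for metric in metrics:
        ranked = sorted(results, key=lambda name: results[name][metric],
                        reverse=True)
        for name in ranked[:top_k]:
            if name not in promoted:
                promoted.append(name)
    return promoted


print(promote(exploratory))
```

Ranking per metric rather than on a single combined score keeps a decode-friendly config in the running even when it loses on prefill.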

Results: 📈 What actually happened

Let’s talk about my exploratory → deep tests.

🔭 Exploratory:

We made 6 different builds:

- llama.cpp (vanilla) - simple default llama.cpp CPU build

- llama.cpp + BLAS

- llama.cpp + BLIS

- ik_llama.cpp - the specialized fork - vanilla

- ik_llama.cpp + BLIS

- ik_llama.cpp + additional flags - that I hoped would enable the FANCY SIMD, but they didn’t, and I also don’t know whether those flags had any influence on the performance of the build

Then we just targeted 2 different modes (after experimentation):

- with numactl enabled - forcing everything to run on the first 16 logical cores (one thread per physical core) - aka all_cores_16t_01

- with numactl disabled - basically dynamic placement (the balance is still off, but it lets the run use whichever cores are available first) - aka no_node_16t
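The two modes boil down to whether the benchmark command gets a numactl prefix. A minimal sketch, assuming the pinned mode uses `numactl --physcpubind=0-15` (the example binding this article gives for its preset) and the unpinned mode runs the command as-is; the benchmark command itself is a placeholder.

```python
# Sketch: wrap a benchmark command according to the pinning mode.
# The mode names come from the article; the exact numactl options
# behind each mode are an ASSUMPTION for illustration.
def with_mode(mode: str, cmd: list[str]) -> list[str]:
    if mode == "all_cores_16t_01":
        # Pin to logical CPUs 0-15 (one per physical core on this box).
        return ["numactl", "--physcpubind=0-15", *cmd]
    if mode == "no_node_16t":
        # No pinning: let the scheduler place threads.
        return list(cmd)
    raise ValueError(f"unknown mode: {mode}")


bench = ["build/bin/llama-bench", "-m", "models/your-model.gguf", "-t", "16"]
for mode in ("all_cores_16t_01", "no_node_16t"):
    print(mode, "->", " ".join(with_mode(mode, bench)))
```

Which logical CPU IDs map to distinct physical cores differs between machines, so it is worth checking the topology (for example with `lscpu -e`) before copying the `0-15` range.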

Results are:


Raws:

Alright, here’s what we learned from this:

- Our custom ik_llama builds (e.g. ik_vanilla) perform very well - on average 60-90% higher throughput than the normal vanilla builds.

- NUMA-aware execution boosts throughput - and at times, by a lot.

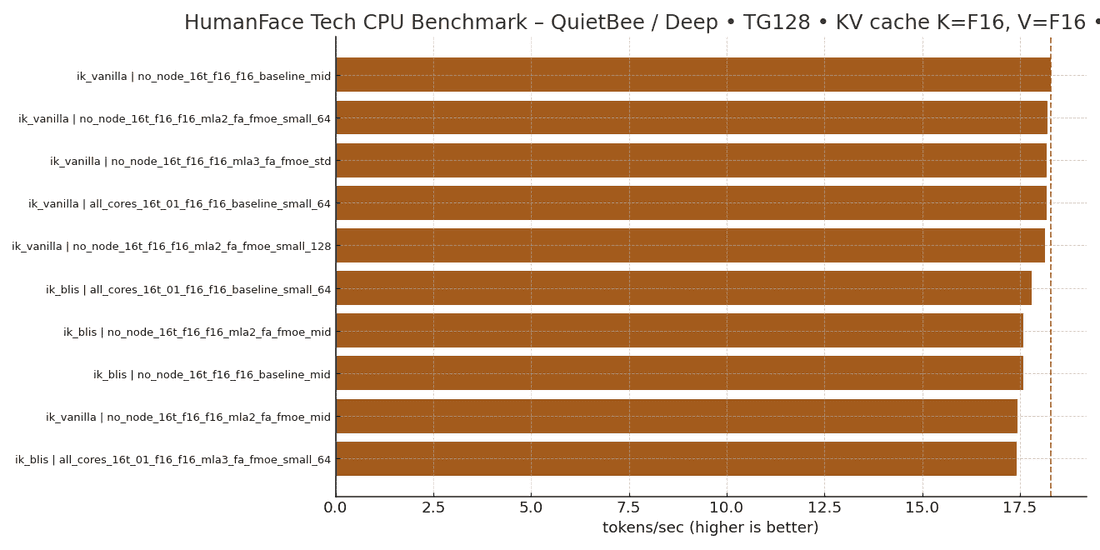

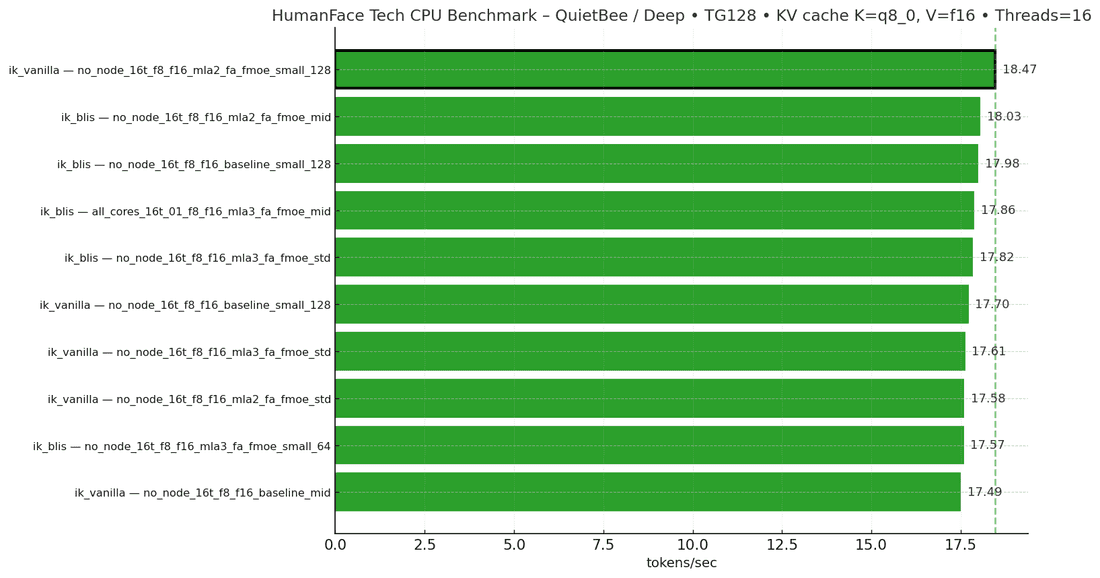

- TG128 (generation/decoding) tells a slightly different story: if you want that number as high as possible no matter what, BLIS might be worth a look. To be fair, ik_vanilla isn’t far behind (the gap may be within run-to-run variance).

Okay okay, let’s dive into a deeper analysis now that we know what we wanna focus on. The report btw generated a promoted.yml config - that we used as our basis - we did adjust it slightly:

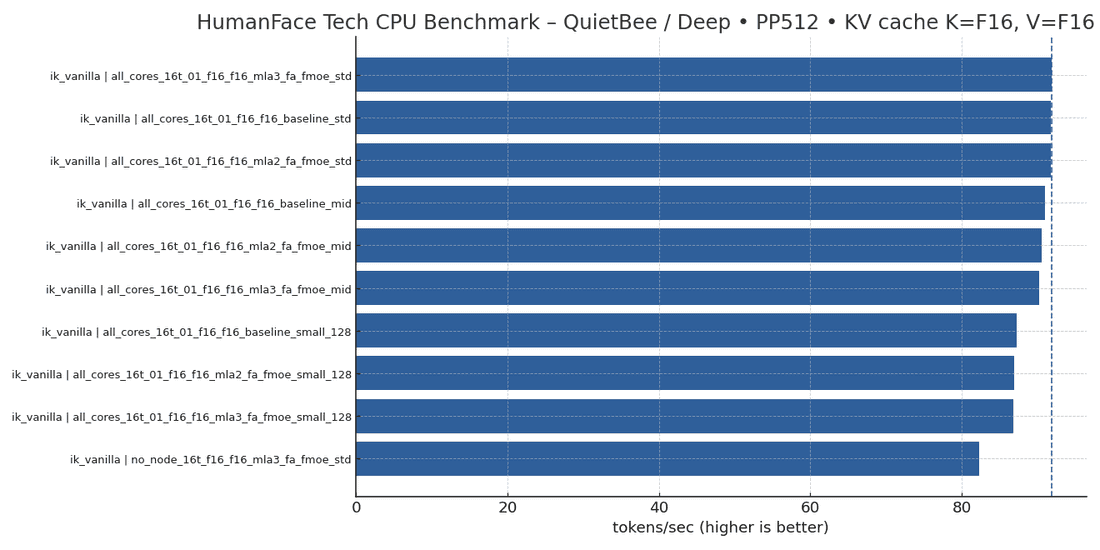

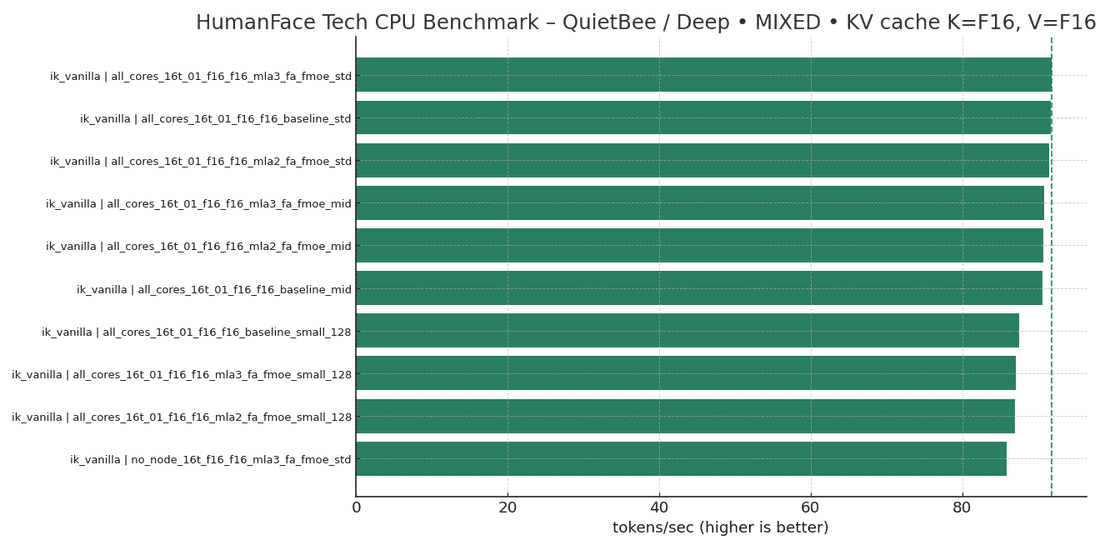

I also ran this as 2 separate runs for different KV params - initially only f16/f16 (classic), and later the q8_0 / f16:

Great - so what about quantizing the K in KV: q8_0 / f16? It does make a model slightly worse (≤1%) - so keep that in mind:

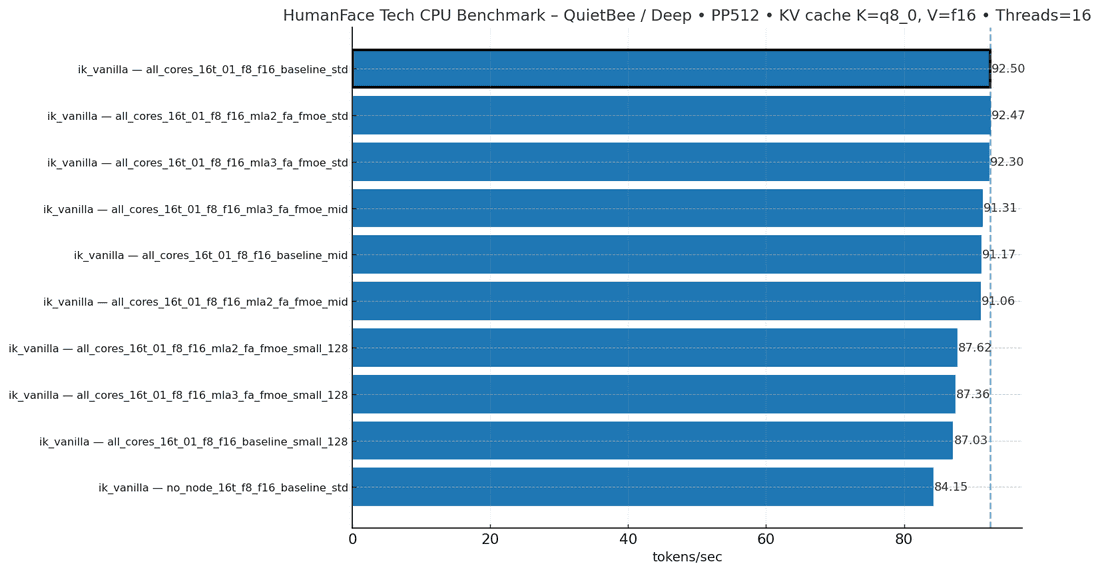

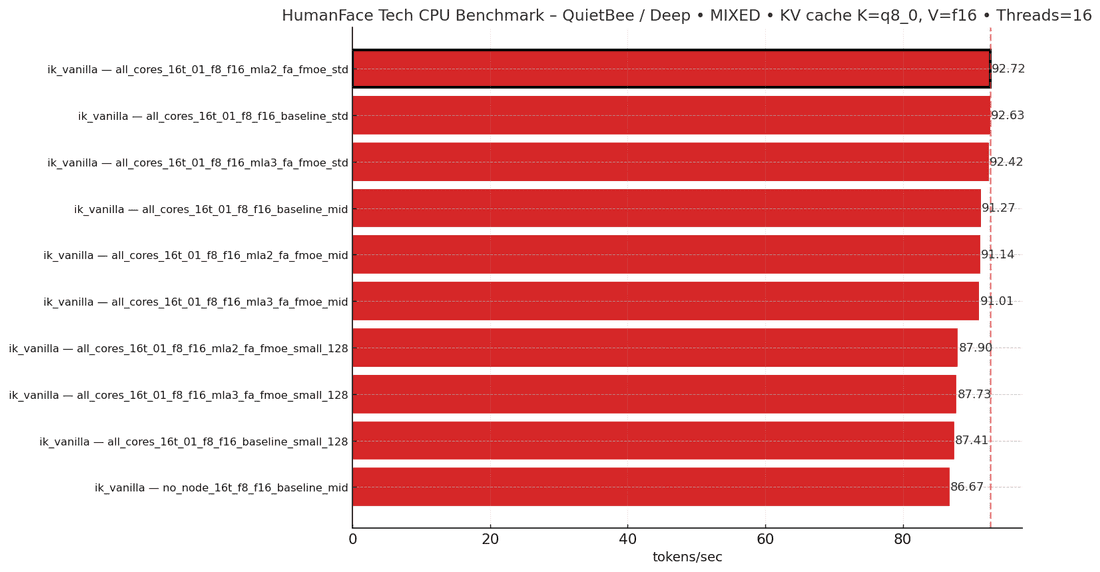

Or visually 📊:

So, we can conclude:

- K-quantization gives a small but consistent speed bump.

- MIXED best improved from ~91.7 t/s (f16/f16) to ~92.7 t/s (q8_0/f16), roughly +1%.

- PP512 (prefill/input) moved from ~91.8 to ~92.5 t/s (+0.7%). TG128 best nudged up from ~18.3 to ~18.5 t/s.

- The “all_cores_16t_01 + ik_vanilla” family still dominates PP512 and MIXED. Whether baseline or with MLA/FA/FMoE toggled, those variants occupy the top slots. The feature flags don’t change ranking much → gains are within ~0–1%.

- TG128 (generation/decoding) remains more sensitive to pinning and small-batch configs. Top TG128 entries skew toward no_node_16t and “small_128/64” variants (both ik_vanilla and ik_blis show up), suggesting generation kernels benefit from tight per-token work and sometimes less aggressive NUMA/pinning.
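One plausible reason K-quantization helps at all is that it shrinks the KV cache the CPU has to stream from RAM; that explanation is our assumption, not something the benchmarks prove. The sketch below estimates cache size for a plain attention layout: q8_0 stores blocks of 32 int8 values plus one f16 scale (34 bytes per 32 elements), versus 2 bytes per element for f16. The model dimensions are hypothetical placeholders, not gpt-oss-20B's real shape.

```python
# Sketch: estimate KV-cache size for different K/V cache types.
# Dimensions below are HYPOTHETICAL, not those of any specific model.
BYTES_PER_ELEM = {
    "f16": 2.0,
    "q8_0": 34 / 32,  # 32 int8 values + one f16 scale per block
}


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   n_ctx: int, type_k: str = "f16",
                   type_v: str = "f16") -> float:
    """Bytes for K plus V across all layers at full context."""
    elems = n_layers * n_kv_heads * head_dim * n_ctx  # per K or per V
    return elems * (BYTES_PER_ELEM[type_k] + BYTES_PER_ELEM[type_v])


shape = dict(n_layers=24, n_kv_heads=8, head_dim=64, n_ctx=8192)
full = kv_cache_bytes(**shape)
mixed = kv_cache_bytes(**shape, type_k="q8_0")
print(f"f16/f16 : {full / 2**20:.1f} MiB")
print(f"q8_0/f16: {mixed / 2**20:.1f} MiB ({mixed / full:.1%} of f16/f16)")
```

Only the K half gets smaller in the q8_0/f16 setup, so the total cache drops to roughly three quarters of the f16/f16 size, which fits a small rather than dramatic speed bump.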

🥇 Our “everyday” preset (and why)

After running multiple rounds of deep CPU benchmarking - hundreds of iterations with different kernel builds, memory pinning strategies, attention implementations, KV cache types, and batching configurations → we can now confidently say we’ve found the sweet spot. This is the setup that delivers top-tier performance across all workload types without sacrificing model quality.

- Build: `ik_vanilla` (the ik_llama.cpp fork, with default settings)

- Threads: 16 (pinned across NUMA nodes, e.g. `numactl --physcpubind=0-15`)

- KV Cache: default (which is f16/f16)

- Flags: `-fa -fmoe -mla 2` (flash attention, fused MoE, multi-head latent attention)

- Batching: standard

- Execution profile: NUMA balanced, threads pinned, page locality enforced
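Put together, the preset can be written down as a single command. The sketch below assembles it for the fork's llama-bench; the model path is a placeholder, and passing explicit `1` values to `-fa` and `-fmoe` is an assumption about how the benchmark binary expects these switches (the flags are listed above as bare switches), so check `llama-bench --help` in your build.

```python
# Sketch: the "everyday" preset as one command line.
# Explicit values for -fa / -fmoe are an ASSUMPTION; verify with --help.
PRESET = {
    "pin": ["numactl", "--physcpubind=0-15"],
    "bench": "build/bin/llama-bench",
    "threads": 16,
    "flags": ["-fa", "1", "-fmoe", "1", "-mla", "2"],
}


def preset_cmd(model_path: str) -> list[str]:
    """Return the full argv: pinning prefix, binary, model, knobs."""
    return [
        *PRESET["pin"],
        PRESET["bench"],
        "-m", model_path,
        "-t", str(PRESET["threads"]),
        *PRESET["flags"],
    ]


# Hypothetical model path, for illustration only.
print(" ".join(preset_cmd("models/your-model.gguf")))
```

KV cache and batching are left out on purpose, since the preset keeps both at their defaults.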

This single configuration emerged as the most consistent and balanced across all three benchmark dimensions we care about:

- PP512 (prompt processing):
  - 🚀 ~91.7 t/s - within 0.2 % of the absolute best run.
  - 📈 +61–62 % faster than vanilla (which averages ~56.8 t/s).

- TG128 (generation):
  - ⚡ ~17.0 t/s - only ~3 % slower than the most extreme TG-optimized build.
  - 📈 ~12–14 % faster than vanilla (~15.0–15.2 t/s).

- MIXED:
  - 🔥 ~91.6–91.8 t/s - best overall throughput when simulating realistic multi-stage workloads.
  - 📈 ~61 % faster than vanilla (~56.8 t/s).
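For anyone re-checking the percentages, the relative gain is simply (new - baseline) / baseline. A tiny sketch using the rounded figures quoted above; since the inputs are rounded, the results can differ from the article's numbers by a fraction of a percent.

```python
# Sketch: relative speedup from the rounded t/s figures quoted above.
def pct_gain(new: float, baseline: float) -> float:
    """Percentage improvement of `new` over `baseline`."""
    return (new - baseline) / baseline * 100.0


comparisons = {
    "PP512 preset vs vanilla": (91.7, 56.8),
    "TG128 preset vs vanilla (15.2)": (17.0, 15.2),
    "TG128 preset vs vanilla (15.0)": (17.0, 15.0),
    "MIXED q8_0/f16 vs f16/f16": (92.7, 91.7),
}

for label, (new, base) in comparisons.items():
    print(f"{label}: {pct_gain(new, base):+.1f} %")
```

The same helper works on your own runs: plug in the mean t/s of two configs and compare the gain against the run-to-run variance before calling a winner.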

🧭 Closing thoughts

I think it was a pretty good ride. We can now restrict some params, swap the model and check again. Or we can install the new PSU or one more RAM stick and try again. I think the general rules should translate (and stick to this CPU+RAM setup), but the real result of the ride is the repo: a wrapper around llama-bench that can (in a few hours) give us a nice & solid comparison table 🔥

If you’re curious - feel free to explore and use the tool - and test things yourself - I’d love to hear what results you encountered, leave a comment 👇.

Also please consider supporting our effort on - Ko-Fi - any donation will go straight into the GPU budget and more articles → https://ko-fi.com/nikrosergiu

And if you want to get in touch about a project - check out HumanFace Tech and book a meeting.

