Local & Privacy Friendly AI in IDE

Hey hey folks! I’ve been quiet for a while, as I was switching jobs and onboarding at a new company - Dropsolid. Now I’m back with some new experiments and ideas - let’s dive into this one 👇

I’ve noticed that some IT companies don’t fully trust big players like Microsoft / GitHub or OpenAI with their code-base - and they’re right to be cautious:

- many developers aren’t aware that open files can be sent automatically to these companies as context, including files such as .env or .sql - with all the secrets they contain;

- even experienced developers might accidentally open these local files and they will be sent away;

- this also plays badly with data compliance and IT security, especially when IP (intellectual property) must not leave the country or the company.

Ideally, the company decides on a few providers that it can trust, or it hosts and deploys a private commercial LLM for employees to use (think: Azure, AWS Bedrock or GCP's Vertex AI) - but this might take a while.

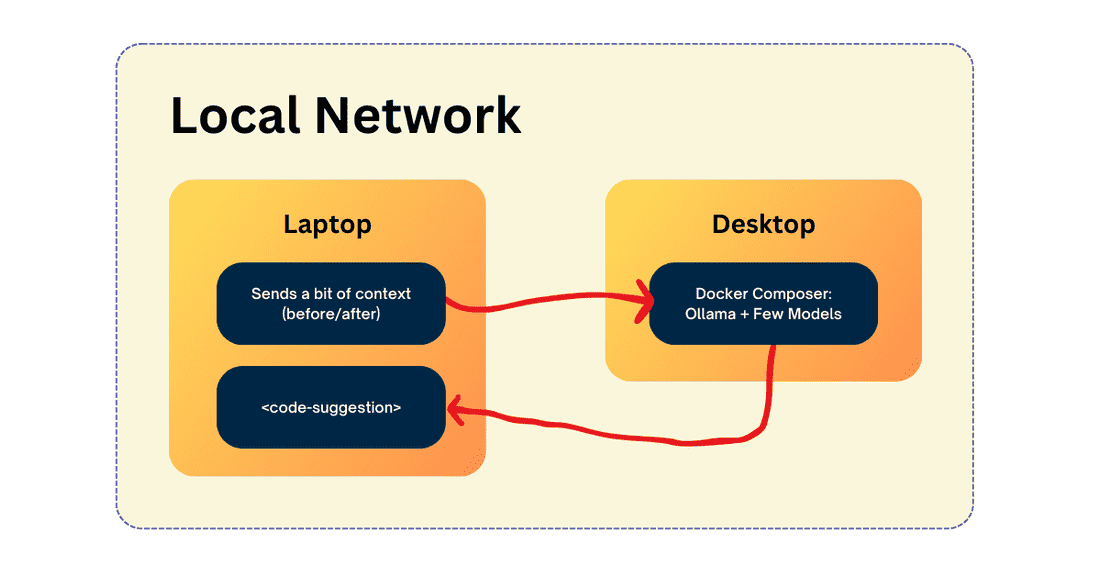

In the meantime - you could safely deploy a local LLM to help with smaller & simpler AI-tasks like auto-completing a few lines in your IDE or helping out with some simple boilerplate. A local LLM keeps things private (either on your device or on your network) and won’t share any of your code-base with a third-party company. Setting this up will, of course, depend on your individual case - in my case, I have a desktop with a gaming GPU, so I decided to deploy an LLM on it and access it over the network from my work laptop.

If you don’t have Apple Silicon or a dedicated GPU, you could use a smaller model and run it on your CPU. I'm not sure how accurate the smaller models are, but for simple one-line completions they should be fine.

How to get the setup running?

- Set up Ollama (either locally, or over the network - the option that I chose);

- Models - decide which models you’ll use, pull those;

- VSCode plugin or IntelliJ (PHPStorm) extension - decide which plugins you’re going to use;

- Use it.

Note: I'll demo both cases, VSCode and PHPStorm. I started writing this article in November 2023 (the VSCode part), so there might be better options by the time you're reading it.

Ollama - the whys and the setup.

There are many different ways to run an LLM locally - I’ve tried and like two of them: llama.cpp & Ollama.

For my particular case, I picked Ollama - because it allows us to run multiple models at the same time (a model per request), is built on top of llama.cpp and is a bit more hassle-free.

I like to keep my host machine clean, so for all of the dependency-heavy things, I use docker & docker-compose. Here’s a simple docker-compose example:

To keep everything nicely together, I also have a simple nginx config that exposes the tokenizer.json file of each model on my local network (the VSCode plugin I’m using needs these, but you can skip this step if you want):

File -> /nginx_config/default.conf

    server {
        listen 80;
        server_name localhost;

        location / {
            root /usr/share/nginx/html;
            index index.html index.htm;
            try_files $uri $uri/ =404;
        }

        error_page 500 502 503 504 /50x.html;

        location = /50x.html {
            root /usr/share/nginx/html;
        }
    }

Once you create this docker-compose.yml file - you can bring it up by running docker compose up -d (this will bring up everything).

Once that is done, you can open a shell in the container with docker compose exec ollama bash.

Inside the container, run ollama pull codellama:7b-code - this pulls codellama:7b-code, a Llama-based coding model (hint: you can pick any model from: https://ollama.com/library). To run the model in the CLI, type ollama run <model>, and you can then chat with the model directly.

If running the model via the CLI worked out fine, we can proceed with the next step. NOTE: there are other, better models out there, e.g. qwen2.5-coder, but it might be a bit trickier to make them work in IDEs, as they are pretty new and plugins lag behind a bit.

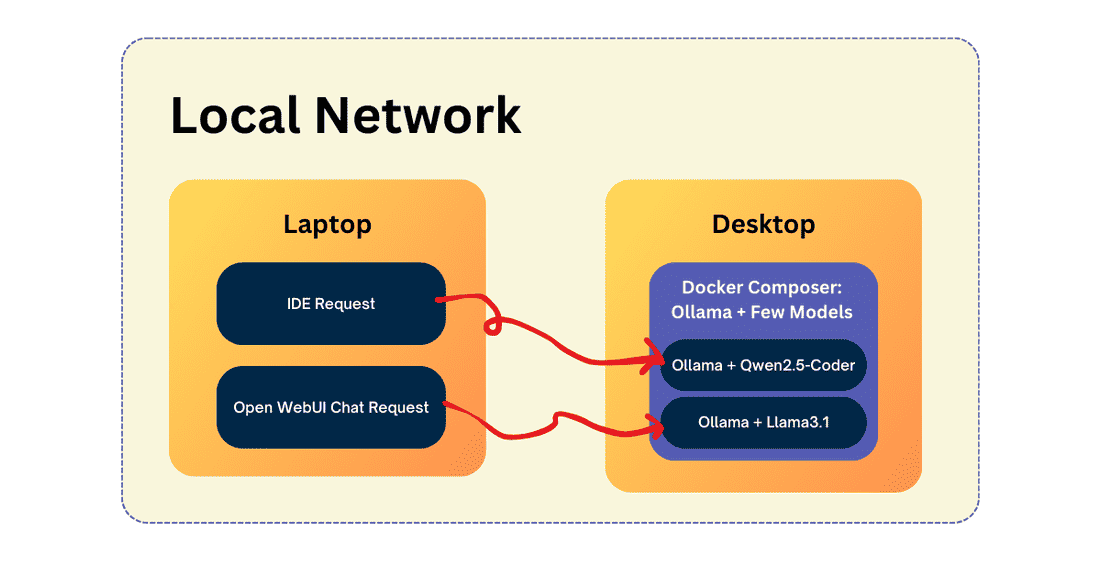

Sidenote: I also added open-webui, so that I (and my wife) can use these models outside of the IDE for simple tasks. There’s a ton of UI libraries you can use - this one worked out well for me, and I think it would suit a small business or family setup too.

Here’s the full adjusted docker-compose.yml:

Having this setup, I now have:

- http://localhost:3000 (or my local IP address) - for the simple web UI around any model and;

- http://localhost:11434 - as the service for my IDE’s autocompletion.
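If you want to sanity-check the Ollama endpoint outside of an IDE, here's a minimal Python sketch (not part of my original setup) that builds and sends a request to Ollama's /api/generate endpoint. The host, port and model name are taken from the setup above; the helper names build_generate_request and generate are just illustrative, so adjust them to your case.

```python
import json
import urllib.request

# Assumption: Ollama listens on its default port, as in the setup above.
# Replace localhost with your desktop's local IP when calling over the network.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(prompt: str, model: str = "codellama:7b-code") -> urllib.request.Request:
    """Build (but do not send) a POST request for Ollama's /api/generate."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "codellama:7b-code") -> str:
    """Send the prompt to Ollama and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read())["response"]
```

Calling generate("def fibonacci(n):") should return a completion if the container is up and the model has been pulled; a connection error usually means the port isn't reachable from the machine you're calling from.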

Let’s move to the VSCode part.

VSCode - what plugins are there

VSCode is nothing without its plugins (at least to me). I browsed through the sea of plugins to find those that could properly replace GitHub Copilot's autocomplete, and there's a ton of them.

I’m sure I didn’t find or check all the plugins but, at least for now, I’ve settled on llm-vscode. The main reasons I’m using this one are:

- It has some traction (~60k installs)

- It comes from Hugging Face - so it carries a bit more weight

- It allows the use of Ollama via its REST API

- It’s fairly simple (although has some extra bells and whistles that I can skip)

Here’s the sample of my settings in VSCode:

In short:

- I’ve disabled the attributionEndpoint - because I don’t want any code to leave my laptop (although it would only be triggered on-demand).

- I’ve adjusted the URL to point to my desktop (with the GPU, running docker-compose with Ollama);

- And some default Fill-in-the-middle settings, specific to codellama.
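To give an idea of what those fill-in-the-middle settings do: the plugin wraps the code before and after your cursor in special marker tokens, and the model fills the gap. Here's a rough Python sketch, assuming the <PRE> / <SUF> / <MID> infill format documented for Code Llama. The helpers build_fim_prompt and split_at_cursor are hypothetical names of mine, not part of llm-vscode, and other models use different markers.

```python
def split_at_cursor(text: str, cursor: int) -> tuple:
    """Split the document into the code before and after the cursor offset."""
    return text[:cursor], text[cursor:]


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Wrap prefix and suffix in Code Llama-style infill markers (assumed format)."""
    return f"<PRE> {prefix} <SUF>{suffix} <MID>"


# Example: the cursor sits at the end of the empty, indented line in the function body.
source = "def compute_gcd(x, y):\n    \n    return result\n"
cursor = source.index("    \n") + 4
prefix, suffix = split_at_cursor(source, cursor)
prompt = build_fim_prompt(prefix, suffix)
```

The resulting prompt is what gets sent to the model, which is expected to answer with just the missing middle part.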

Now I can get sweet auto-completions over my network (using a specific local IP instead of localhost):

JetBrains / PHPStorm - what plugin can I use?

I’ve found the perfect plugin to use for PHPStorm - it does exactly what’s needed: https://www.codegpt.ee/

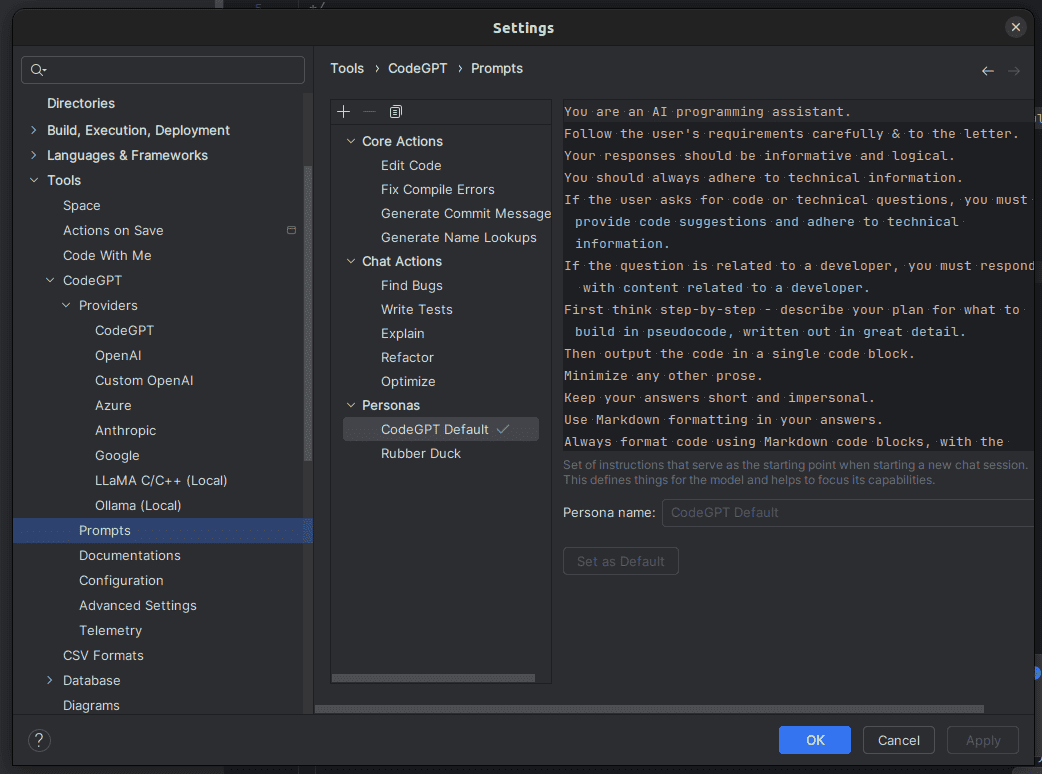

I like that it’s simple, the author is solid, and it works for free with a self-hosted model. As far as I could tell, there’s no hidden telemetry - there IS telemetry for plugin usage, but it’s off by default and strictly opt-in.

Here’s the source: https://github.com/carlrobertoh/CodeGPT

Here’s the plugin for PHPStorm: https://plugins.jetbrains.com/plugin/21056-codegpt

What I like is that you can have your own actions / prompts / personas, and even Documentation pages - this is really helpful to force the AI to stick to a given framework or a specific version of it - for example, Drupal 7.

You can also paste-in best-practices for given actions, which would guide AI for specific code-suggestions or scaffolding.

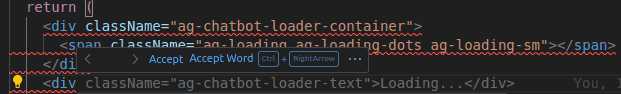

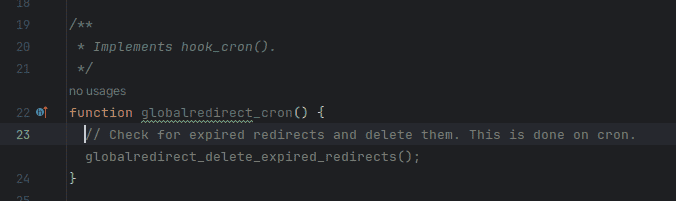

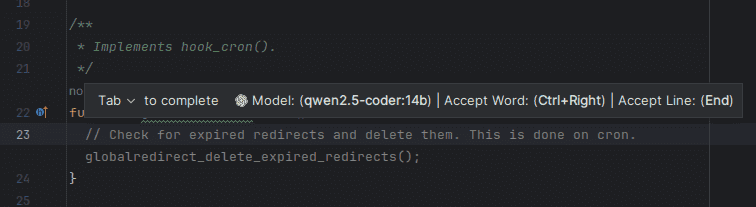

It shows ghost text like Copilot, and you have a few options for accepting it: the whole snippet, word by word, or line by line:

You could also use a reverse proxy and assign a sub-domain. This will allow you to use your Ollama instance not just from your local network, but from anywhere, really. Just keep in mind that you'd have to secure it 🔒:

- for CodeGPT - you would have to use a Bearer secret (in your nginx / apache settings)

- and for llm-vscode - you can set up HTTP basic auth (htpasswd), and the URL would be: https://user:pass@sub.domain.com/api/generate
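To see what these two options look like on the wire, here's a small Python sketch of the Authorization headers a client would send. The function names and the example credentials are hypothetical; the header formats themselves are the standard Bearer and Basic HTTP schemes, which your reverse proxy would then have to check.

```python
import base64


def bearer_header(token: str) -> dict:
    """Header for a shared-secret setup (the CodeGPT case)."""
    return {"Authorization": f"Bearer {token}"}


def basic_auth_header(user: str, password: str) -> dict:
    """Header equivalent to putting user:pass@ in the URL (the llm-vscode case)."""
    raw = f"{user}:{password}".encode("utf-8")
    return {"Authorization": "Basic " + base64.b64encode(raw).decode("ascii")}


# Placeholder credentials, matching the user:pass example in the URL above.
headers = basic_auth_header("user", "pass")
```

Keep in mind that basic auth credentials are only base64-encoded, not encrypted, so both options only make sense over HTTPS.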

Questions / Alternatives?

I’m sure there are more ways to accomplish this, and some are probably way better. I also probably won’t stick with the llm-vscode plugin, because after checking other plugins I realized that some developers have great ideas. I’d love to have simple agents, shortcuts for invoking them, indexing of my code-base into a local vector-store, agents that collaborate if needed, etc. But for now, this will do.

Do you have another setup you’re using? Did you find your perfect workflow? I’d love to hear about that, please leave a comment below! 🙂

