Nichebench + New models

See the original article on Nichebench, where we spent some credits to find out how well the models perform in the Drupal field.

New models

In the last 2-3 months more models appeared on the radar, and some of them are very interesting:

- Kimi K2 Thinking (latest-latest) - 1T Params (32B active)

- Kimi K2 Instruct 0905 - 1T Params (32B active)

- Kimi Linear - 48B (3B active)

- GLM 4.6 - 355B (32B active)

- MiniMax M2 - 230B (10B active)

- IBM Granite 4 Small - 32B (9B active)

- Nemotron Super - 49B - dense

- Nemotron Ultra - 253B - dense (?)

Some of these models showed really good results in various benchmarks 👇

SWE-bench Verified (Software Engineering):

- Kimi K2 Thinking: 71.3% (claimed)

- MiniMax M2: 69.4% (claimed)

- GLM-4.6: 68.20%

- Kimi K2 Instruct: 65.8%

- GPT-OSS-120B: 62.4% - for reference

Terminal-Bench (CLI/Terminal tasks):

- Kimi K2 Thinking (with sim tools): 47.1%

- MiniMax M2: 46.3%

- Kimi K2 Instruct: 44.5%

- GLM-4.6: 40.5%

HLE (Humanity’s Last Exam):

- Kimi K2 Thinking - 23.9% (claimed), 22.3% (reported), 44.9% (claimed, with tools)

- GPT-OSS-120B - 18.5% (reported)

- GLM 4.6 - 17.2% (claimed), 13.3% (reported), 30.4% (claimed, with tools)

- MiniMax M2 - 12.5% (reported), 31.8% (claimed with tools)

- Granite 4 H Small - 3.7% (reported) 😟

Arch & Sizes Comparison:

| Model | Size / Active | Experts | Active Per Token | Routing Type |
|---|---|---|---|---|
| Kimi K2 (both) | 1T / A32B | 384 | 8 + 1 shared | Shared expert + routed |
| GLM 4.6 | 355B / A32B | ~1000+ | ~8+ (top experts) | Likely deterministic |
| MiniMax M2 | 230B / A10B | ~500+ | ~8 | Proprietary routing |
| GPT-OSS-120B | 117B / A5B | 128 | 4 (top-4) | Deterministic routing |
| Kimi Linear 48B | 48B / A3B | 256 | 8 | Deterministic routing |
| Granite 4.0 | 32B / A9B | 72 | 8 (top-8) | Deterministic routing |
| Qwen3-Coder | 30B / A3B | 128 | 8 (top-8) | Deterministic routing |
| GPT-OSS-20B | 21B / A3.6B | 32 | 4 (top-4) | Deterministic routing |
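To make the "Active Per Token" column a bit more concrete, here is a minimal sketch of top-k expert routing in plain Python. The gate scores are made up for illustration, and `route_top_k` and `active_fraction` are hypothetical helpers, not any model's real router. A shared expert, as listed for Kimi K2, would simply run for every token on top of the routed ones.

```python
import math


def route_top_k(gate_logits, k):
    """Pick the k highest-scoring experts and softmax-normalise their weights."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    peak = max(gate_logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = {i: math.exp(gate_logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def active_fraction(total_params_b, active_params_b):
    """Share of the weights that are actually used for one token."""
    return active_params_b / total_params_b


# Toy gate scores for 6 experts (illustrative values only).
weights = route_top_k([0.1, 2.0, -1.0, 0.7, 1.5, 0.0], k=2)

# Sizes taken from the table above, in billions of parameters.
kimi_k2_share = active_fraction(1000, 32)
minimax_m2_share = active_fraction(230, 10)
```

The same gate scores always select the same experts, which is one way to read the "deterministic routing" label in the table.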

The benchmark results are a bit odd 🤔 - self-reported values are usually higher, since companies employ tools, agentic workflows, or multiple tries (e.g. Best@K) to get higher scores. Overall, here’s my take on them:

- Granite 4 H Small 32B - a bit of an outlier: very little information online and almost no inference providers offer it, but it’s also intriguing.

- Kimi Linear 48B - also very little benchmark info, probably because it’s small and generally unimpressive.

- Kimi K2 models - I’ll discard these outright because they are 1T params.

- GLM 4.6 and MiniMax M2 - both are interesting. I’m leaning towards MiniMax M2, mostly because of its lower parameter count (though it is still 2X GPT-OSS-120B without offering a considerable boost).

- Nvidia models are somewhat interesting, but again, there is not a lot of information online.
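On the point about multiple tries inflating scores: a rough sketch of how much a "k attempts" metric can lift a number. It uses the widely used unbiased pass@k estimator as a stand-in, since we don't know exactly how each vendor computed its Best@K figures. The sample counts below are invented for illustration.

```python
from math import comb


def pass_at_k(n, c, k):
    """Chance that at least one of k samples drawn from n attempts (c correct) passes."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    # 1 minus the probability that all k drawn samples are incorrect.
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical task: 10 attempts, 3 of them correct.
single_try = pass_at_k(n=10, c=3, k=1)
five_tries = pass_at_k(n=10, c=3, k=5)
```

In this made-up case the same model goes from 30% with one try to over 90% with five, which is why a claimed score and an independently reported one are hard to compare without knowing the setup.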

—

🗨️ But, what do people say? Had AI run some sentiment analysis:

GLM-4.6

- Pros: Excellent at real-world coding, multi-step reasoning, and tool-using workflows, often rated near Claude Sonnet level for practical software engineering.

- Cons: Still trails Claude on headline benchmarks, some complaints about sluggishness in real setups, and seen as “great but not quite frontier closed-source tier.”

MiniMax M2

- Pros: Strong at task breakdown, planning, iterative diff-based coding and front-end work; highly praised for practical, runnable engineering loops and cost-effectiveness.

- Cons: Tool-calling reliability lags top models, continuous reasoning adds overhead, and some users feel it’s over-tuned to benchmarks rather than robust general reasoning.

Kimi Linear 48B A3B

- Pros: Great for long-context coding and analytical tasks, surprisingly strong given tiny active params and low training tokens, seen as a very promising efficient coder / reasoner.

- Cons: Benchmarks still behind top open coders, real-world feedback is thin, and community feels blocked by missing tooling and ecosystem support.

Qwen3-Coder-30B A3B

- Pros: Good at straightforward coding and powering local agentic workflows, with solid tool-use behavior and strong feedback for day-to-day coding tasks.

- Cons: Weaker on complex multi-step reasoning, often outperformed by its own general instruct sibling in conversational coding, and seen as somewhat overfit to tool schemas.

Granite 4.0 H Small 32B

- Pros: Very good at RAG, document understanding, and summarization with strong function-calling behavior in enterprise-style text workflows.

- Cons: Widely panned for coding; struggles with even simple programming tasks, hallucinates test results, and is explicitly recommended against for software engineering.

Here’s how AI rates these in different categories (via Perplexity with Claude 4.5 Sonnet):

| Model | Coding Quality | Agentic / Tools | Local / DIY Readiness | Cost / Value | Community Tagline |
|---|---|---|---|---|---|
| GLM-4.6 | 9/10 | 9/10 | 2/10 | 6/10 | Best open coder, infra-annoying |
| MiniMax M2 | 7/10 | 9/10 | 3/10 | 9/10 | Cheap, pragmatic engineering workhorse |
| Kimi Linear 48B | 6/10 | 7/10 | 8/10 | 8/10 | Efficient long-context hopeful, tooling-gated |
| Qwen3-Coder-30B | 6/10 | 5/10 | 9/10 | 8/10 | Solid local agent coder, struggles with depth |
| Granite 4.0 Small | 2/10 | 4/10 | 9/10 | 8/10 | Great RAG engine, bad programmer |

What I see from these: Granite 4 H Small remains a bit of an outlier, and MiniMax M2 is very interesting. But these models don’t depart far from GPT-OSS-120B, for example.

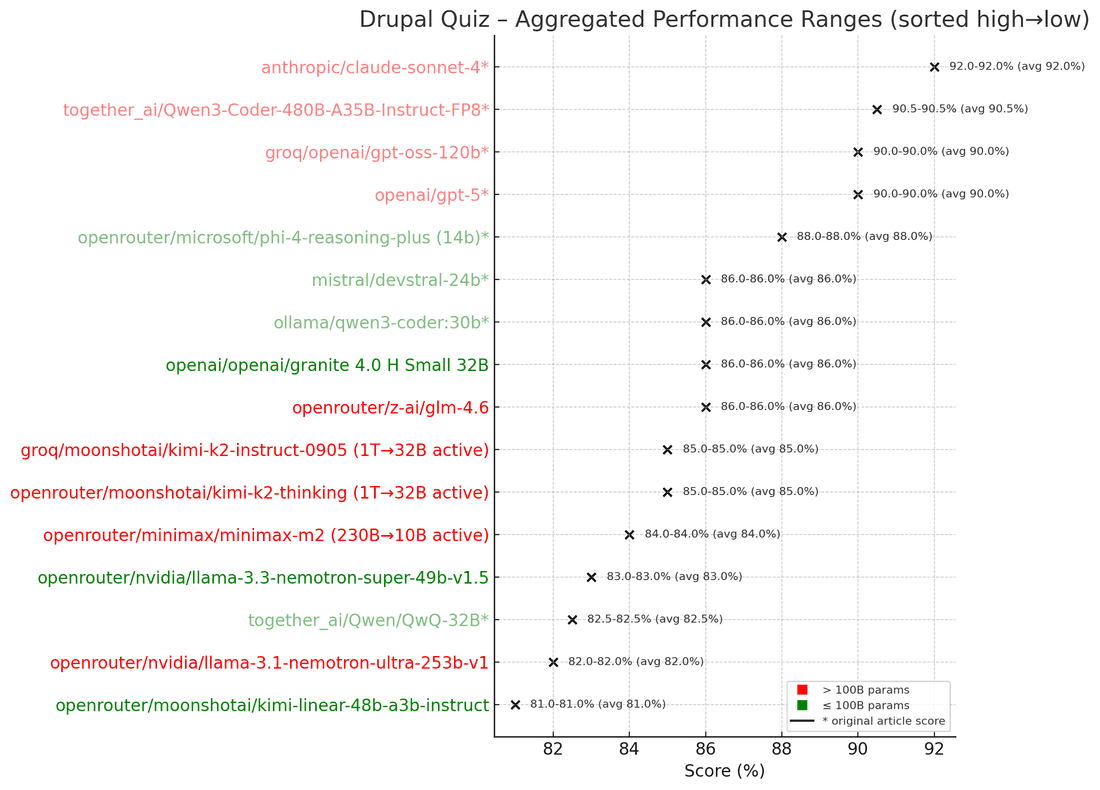

Results on our Nichebench - Drupal

Here are the raw results from a single run of the Nichebench Drupal quiz:

- Granite 4.0 Small 32B @ Q4 local run – 86%

- GLM-4.6 – 86%

- Kimi K2 Instruct 0905 (1T→32B active) – 85%

- Kimi K2 Thinking (1T→32B active) – 85%

- MiniMax M2 (230B→10B active) – 84%

- Nemotron Super 49B – 83%

- Nemotron Ultra 253B – 82%

- Kimi Linear 48B A3B – 81%

As you can see, IBM’s Granite 4 Small, despite being a small model, scored very well. But in the original article we also had a quiz-based outlier - Phi4 Reasoning (14B params). Even Devstral at 24B did similar or a bit better, at 86-88%.

MiniMax M2 and GLM did okay, Kimi Linear did worse.
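Since these numbers come from a single run, it helps to see how wide the uncertainty on a quiz score can be. Below is a minimal sketch using the Wilson score interval. The quiz size of 100 questions is a placeholder assumption (the actual number of questions isn't stated here), so treat the output as an illustration, not as a result.

```python
import math


def wilson_interval(correct, total, z=1.96):
    """Approximate 95% confidence interval for an accuracy of correct/total."""
    p = correct / total
    denom = 1 + z * z / total
    centre = (p + z * z / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return centre - half, centre + half


ASSUMED_QUESTIONS = 100  # hypothetical quiz size, not taken from the benchmark

top_low, top_high = wilson_interval(86, ASSUMED_QUESTIONS)        # an 86% score
bottom_low, bottom_high = wilson_interval(81, ASSUMED_QUESTIONS)  # an 81% score
intervals_overlap = bottom_high > top_low
```

Under that assumed quiz size the intervals for 86% and 81% overlap, so a few points of difference between models in one run may well be noise.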

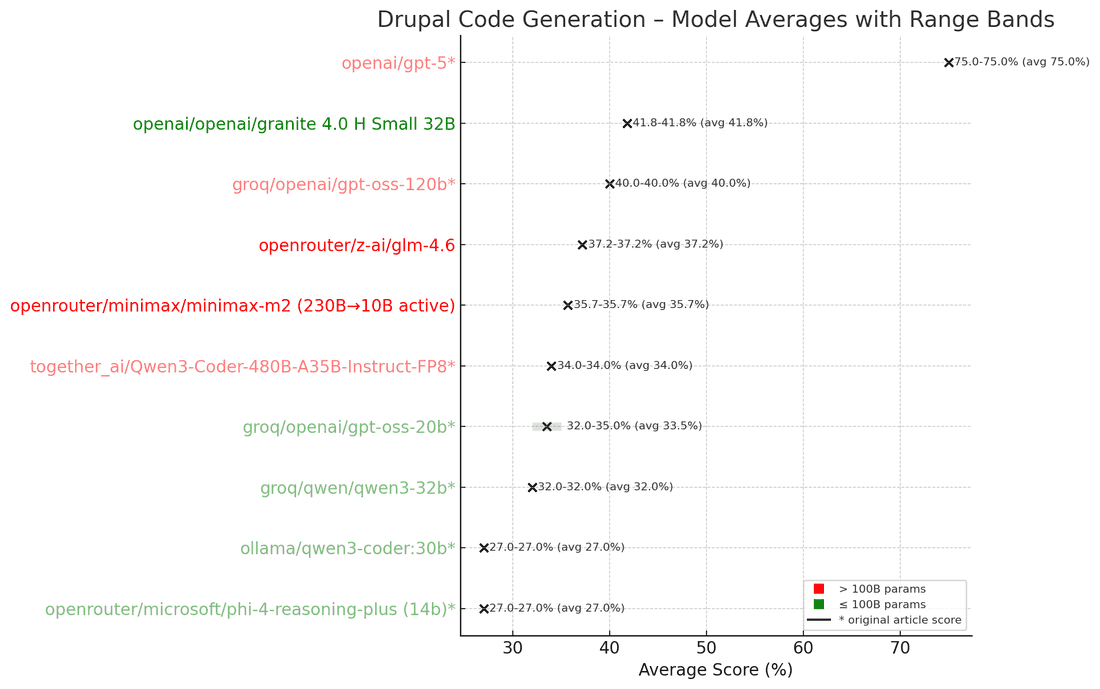

Let’s move on to code generation 🧑‍💻

This was very surprising 😮 - somehow the model with a bad rep in the coding field scored pretty high, even higher than GPT-OSS-120B, GLM 4.6 and MiniMax M2. Somehow Granite 4 Small still makes waves. We ran it locally on a GPU + CPU mix, which yielded an OK speed (>20 t/s generation). We used the Q4_K_M flavor, and the initial test used temperature 0.6 and top_p 0.95, scoring ~41.8%.

We reran the test to double-check whether it really scores that high, this time lowering the temperature to 0.1 and raising top_p to 1.0. Now it scored only ~18%. Hm, was the initial high score a glitch? 🤔 We re-judged some of the responses that had scored 1.0 (judged by GPT-5) with Claude Haiku, Sonnet and Deepseek, and the smarter the model, the lower the score it gave: Sonnet 4.5 Thinking rated 0.3, while Haiku and Deepseek were more forgiving, giving 0.7-0.8 (but still not 1.0). Maybe GPT-5 as a judge glitched briefly via the API?

For the third run we bumped the temperature back up to 0.5 and lowered top_p to 0.95 to see if we could still score >30%. Score: 20% (huh).
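The judge disagreement above hints that a single LLM judge can be a weak point. One possible mitigation, sketched here as an assumption and not as what Nichebench currently does, is to ask several judges and take the median, flagging answers where they disagree too much. The per-judge values mirror the range mentioned above; which of Haiku and Deepseek gave 0.7 and which gave 0.8 is a guess, and the `max_spread` threshold is arbitrary.

```python
from statistics import median


def aggregate_judges(scores, max_spread=0.3):
    """Combine per-judge scores (0.0-1.0) into a median and flag wide disagreement."""
    values = list(scores.values())
    spread = max(values) - min(values)
    return {
        "score": median(values),
        "spread": spread,
        "needs_review": spread > max_spread,
    }


# Illustrative scores for one response, based on the ranges quoted above.
verdict = aggregate_judges({
    "gpt-5": 1.0,
    "sonnet-4.5-thinking": 0.3,
    "haiku": 0.7,      # assumed split of the 0.7-0.8 range
    "deepseek": 0.8,   # assumed split of the 0.7-0.8 range
})
```

With these inputs the median lands between the forgiving and the strict judges, and the response gets flagged for a manual look.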

OK, moving on → GLM 4.6 and MiniMax M2 did generally okay-ish, but still worse than GPT-OSS-120B. I still didn’t want to give up on MiniMax M2, so we reran code generation with the temperature bumped to 1.0 and top_k set to 40. Score: 33%. And with temperature 0.1: 25%.
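Because the scores swing so much with sampling settings, here is a small sketch of what the knobs do to a next-token distribution. It is a simplified toy, not the sampler of any specific inference engine (real engines may apply the filters in a different order). Note that top_k is a token count, while a value like 0.95 is a probability-mass cutoff, usually exposed as top_p.

```python
import math


def sampling_distribution(logits, temperature=1.0, top_k=0, top_p=1.0):
    """Return token -> probability after temperature, top-k and top-p filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0")
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(((tok, e / total) for tok, e in exps.items()),
                    key=lambda pair: pair[1], reverse=True)
    if top_k > 0:
        ranked = ranked[:top_k]  # keep only the k most likely tokens
    if top_p < 1.0:
        kept, mass = [], 0.0
        for tok, prob in ranked:  # keep the smallest set covering top_p of the mass
            kept.append((tok, prob))
            mass += prob
            if mass >= top_p:
                break
        ranked = kept
    norm = sum(prob for _, prob in ranked)
    return {tok: prob / norm for tok, prob in ranked}


toy_logits = {"a": 2.0, "b": 1.0, "c": 0.0, "d": -1.0}  # made-up values
cold = sampling_distribution(toy_logits, temperature=0.1)
top_two = sampling_distribution(toy_logits, temperature=1.0, top_k=2)
nucleus = sampling_distribution(toy_logits, temperature=1.0, top_p=0.6)
```

At temperature 0.1 nearly all the probability sits on the top token, so runs become close to greedy decoding; at 1.0 with top_k the model keeps real alternatives, which can change a code-generation score in either direction.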

Nichebench - does it drive the selection?

Short answer: no, but it gives a good hint.

Here’s what we’re looking for in a model, a combination of these pillars:

- Popularity - the more popular the model, the more fine-tuning recipes, inference options, providers, QLoRA examples, etc.

- Size & Architecture - ideally there should be a family of similar models, but that’s not mandatory. For us it is important to have a smaller model to experiment on locally and build the pipeline around, and then replicate the same steps on its bigger brother, potentially in the cloud. Families such as GPT-OSS, Qwen (Coder), or even Granite 4 are a good fit here.

- Capabilities:

  - Tool-use - it should know when to use which tools, be able to select the right tools, call them with the right parameters, etc. This involves serialization in JSON, and more.

  - Multi-turn agentic behavior - the model should be able to handle small agentic runs, i.e. make 20-30 turns autonomously. Some of these turns, obviously, will be tool uses.

  - Long contexts - the model should be able to explore the repo, collect information in its context and use it later. It should make good use of needles it found a few hundred pages earlier.

- Only then can we throw in the niche-domain knowledge, like Drupal.
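To illustrate the tool-use and multi-turn pillars, here is a minimal sketch of the kind of harness they imply: every model reply must be a JSON tool call with a known name and the right parameters, and the loop runs for a bounded number of turns. The tool names, the `{"tool": ..., "arguments": ...}` shape and `model_step` are all hypothetical, chosen for the example and not tied to any vendor's tool-calling format.

```python
import json

# Hypothetical tool registry: tool name -> required parameters and their types.
TOOLS = {
    "read_file": {"path": str},
    "search_repo": {"query": str},
    "finish": {"answer": str},
}


def parse_tool_call(raw):
    """Return (name, args) for a valid call to a known tool, else raise ValueError."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")
    name, args = call.get("tool"), call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    for param, kind in TOOLS[name].items():
        if not isinstance(args.get(param), kind):
            raise ValueError(f"{name}: parameter {param!r} missing or not {kind.__name__}")
    return name, args


def run_agent(model_step, executors, max_turns=30):
    """Call model_step(history) until it emits a 'finish' call or the turn budget ends."""
    history, errors = [], 0
    for turn in range(1, max_turns + 1):
        try:
            name, args = parse_tool_call(model_step(history))
        except ValueError as err:
            errors += 1  # malformed call: feed the error back and let the model retry
            history.append({"role": "error", "content": str(err)})
            continue
        if name == "finish":
            return {"answer": args["answer"], "turns": turn, "errors": errors}
        history.append({"role": "tool", "name": name, "content": executors[name](**args)})
    return {"answer": None, "turns": max_turns, "errors": errors}


# Scripted stand-in for a model: one good call, one broken reply, then a final answer.
script = iter([
    '{"tool": "search_repo", "arguments": {"query": "hook_form_alter"}}',
    "not json",
    '{"tool": "finish", "arguments": {"answer": "done"}}',
])
result = run_agent(lambda history: next(script), {"search_repo": lambda query: "1 match"})
```

Counting turns and malformed calls per task, as this loop does, is one cheap way to compare models on these two capabilities before any domain knowledge comes into play.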

So, what will we choose?

So far, we're still not convinced to move away from the original path: GPT-OSS-20B → GPT-OSS-120B.

But we will still run some deeper tests, definitely keeping our eyes on MiniMax M2 - its dev-benchmark scores are very good. It’s also pretty fresh and not very popular yet (no Unsloth, no together.ai, etc.), so MiniMax M2 might be the 3rd stepping stone, after GPT-OSS-120B. The same goes for the Granite 4 family: one advantage of Granite 4 is that it comes in even smaller sizes for experimentation.

Now that you reached the end 😆 - please consider supporting our effort on Ko-Fi: any donation will go straight into the GPU budget and more articles → https://ko-fi.com/nikrosergiu

And if you want to get in touch about a project, check out HumanFace Tech and book a meeting.

