QuietBee: Building a Home AI Lab for Fine-Tuning

1. 🧭 Introduction - Why We're Building QuietBee

On our HumanFace Tech journey into niche AI 🤖, we knew we'd need a small home AI lab for fine-tuning, synthetic data generation, and inference testing. So, throughout the spring and summer of 2025, we analyzed how we could build a powerful AI lab for under €2000.

We currently have an RTX 3060 with 12 GB of VRAM, and it was enough to try out different quantized models via llama.cpp or Ollama. It was also enough for some fine-tuning on small quantized models, as we did in a previous article.

But for proper fine-tuning, we need way more VRAM and a separate machine that can work for a few hours or even days 📅 if needed (without blocking the main day-work desktop).

Initially, we set our sights on running inference on a 70B model at Q4, such as DeepSeek R1 (the Llama-based distill). For this model, we'd need about 48 GB of VRAM (roughly 40 GB for the weights, plus room for a reasonable context window) for comfortable inference. Various optimizations can be factored in, but that leaves us with a ~6-7k token context window, which is a good start.

Alternatively, for inference, running a Qwen3-32B dense model with a 25k context window also fits well within 48 GB of VRAM.
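A back-of-the-envelope way to sanity-check these numbers is to add the quantized weights to the KV cache. The sketch below is our own estimate, not how any specific runtime computes memory: it assumes ~4.5 bits per weight as a stand-in for a Q4-style quant, an FP16 KV cache, Llama-3-70B-style and Qwen3-32B-style shapes (80 and 64 layers, 8 KV heads, head dimension 128), and a flat 1.5 GB of runtime overhead. Real tools such as llama.cpp will report different figures.

```python
# Back-of-the-envelope VRAM estimate for LLM inference:
# quantized weights + KV cache + a flat overhead for runtime buffers.
# The model shapes, bits-per-weight and overhead below are assumptions.
from dataclasses import dataclass

GB = 1e9  # decimal gigabytes


@dataclass
class ModelShape:
    params_b: float   # total parameters, in billions
    n_layers: int     # number of transformer layers
    n_kv_heads: int   # key/value heads (grouped-query attention)
    head_dim: int     # dimension of each attention head


def weights_gb(shape: ModelShape, bits_per_weight: float) -> float:
    """Memory taken by the quantized weights."""
    return shape.params_b * 1e9 * bits_per_weight / 8 / GB


def kv_cache_gb(shape: ModelShape, context_tokens: int, bytes_per_elem: int = 2) -> float:
    """KV cache: K and V per layer, per KV head, per token (FP16 by default)."""
    per_token = 2 * shape.n_layers * shape.n_kv_heads * shape.head_dim * bytes_per_elem
    return per_token * context_tokens / GB


def total_gb(shape: ModelShape, bits_per_weight: float, context_tokens: int,
             overhead_gb: float = 1.5) -> float:
    """Weights + KV cache + an assumed flat overhead for compute buffers."""
    return weights_gb(shape, bits_per_weight) + kv_cache_gb(shape, context_tokens) + overhead_gb


# Assumed shapes: a Llama-3-70B-style model and a Qwen3-32B-style model.
LLAMA_70B = ModelShape(params_b=70.6, n_layers=80, n_kv_heads=8, head_dim=128)
QWEN3_32B = ModelShape(params_b=32.8, n_layers=64, n_kv_heads=8, head_dim=128)

if __name__ == "__main__":
    # ~4.5 bits per weight is a rough stand-in for a Q4-style quant.
    print(f"70B, 7k context:  {total_gb(LLAMA_70B, 4.5, 7_000):.1f} GB")
    print(f"32B, 25k context: {total_gb(QWEN3_32B, 4.5, 25_000):.1f} GB")
```

Under these assumptions, the 70B case lands in the mid-40s of GB and the 32B case stays well under 48 GB. Quantizing the KV cache (for example, `bytes_per_elem=1` as a rough stand-in for 8-bit) would stretch the context further.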

Today, you can use the 🧮 ApX Calculator tool to simulate various scenarios before actually buying hardware. Back then, such tools weren't available.

—

Some might ask: “But why do it at home at all? Can't you just run it on a GCP or AWS instance?” ☁️ Of course you can, but… we want to be able to do long runs and optionally test models on the spot, and the cheapest 20 GB VRAM machine we can use continuously is a Hetzner server at about €200/month (it used to be ~€350 a few months ago). A machine with 48 GB of VRAM and plenty of tensor cores would cost at least ~€500/month (GCP and AWS are way more expensive, going beyond €1000/month). So, we'd rather stick to an in-house lab for what we're planning to do.
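The rent-vs-buy argument boils down to a simple break-even calculation. Here is a minimal sketch using the €2000 budget and the ~€500/month 48 GB cloud figure from above; the electricity cost is a hypothetical input, since it depends entirely on local prices, and the sketch ignores resale value and idle time.

```python
def break_even_months(hardware_eur: float, cloud_eur_per_month: float,
                      power_eur_per_month: float = 0.0) -> float:
    """Months of continuous use after which buying beats renting.

    Ignores resale value, repairs and idle time; power cost is an input
    because it depends entirely on local electricity prices.
    """
    monthly_saving = cloud_eur_per_month - power_eur_per_month
    if monthly_saving <= 0:
        return float("inf")  # renting never costs more than running at home
    return hardware_eur / monthly_saving


if __name__ == "__main__":
    # €2000 lab vs. the ~€500/month 48 GB cloud machine mentioned above.
    print(f"{break_even_months(2000, 500):.1f} months (electricity ignored)")
    # Hypothetical €60/month power bill for running the lab around the clock.
    print(f"{break_even_months(2000, 500, 60):.1f} months (with power)")
```

Even with a generous power bill, continuous use pays the lab off within a few months, which is why long runs favor owning the hardware.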

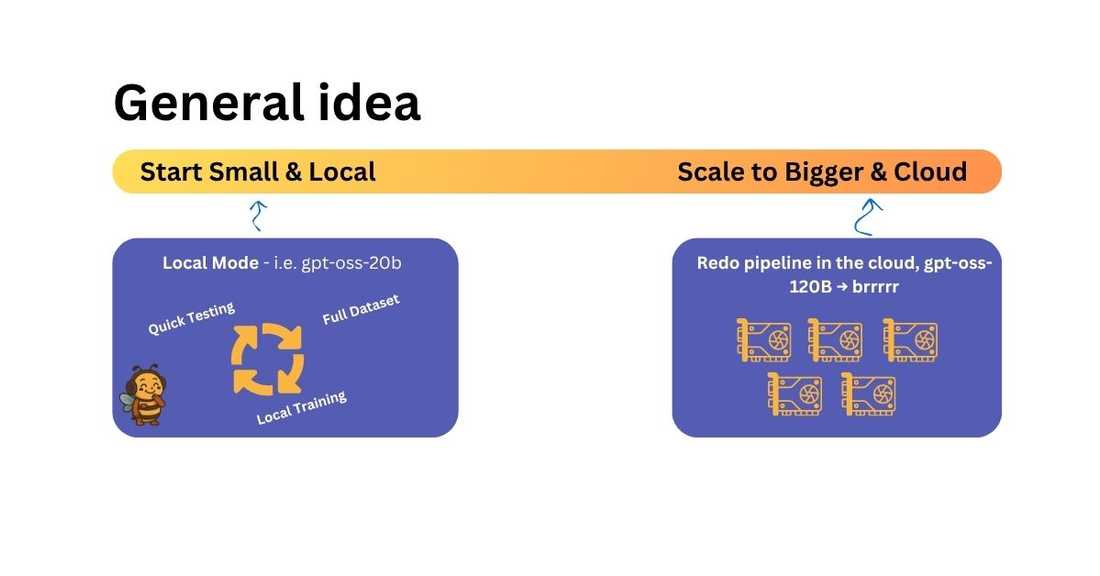

The AI lab will be a perfect place to start, but we won't be able to fine-tune very large models (think 70B+ parameters, let alone 120B+). Once we get good results with our pipeline at a smaller scale, we can always rent cloud hardware and re-run the pipelines (assuming they only need a few hours, not days, so we can afford it).

We also set a financial limit: €2000. We don't think a small AI lab should cost more than that.

2. 🛣️ Three Hardware Paths - Finding the Best Strategy

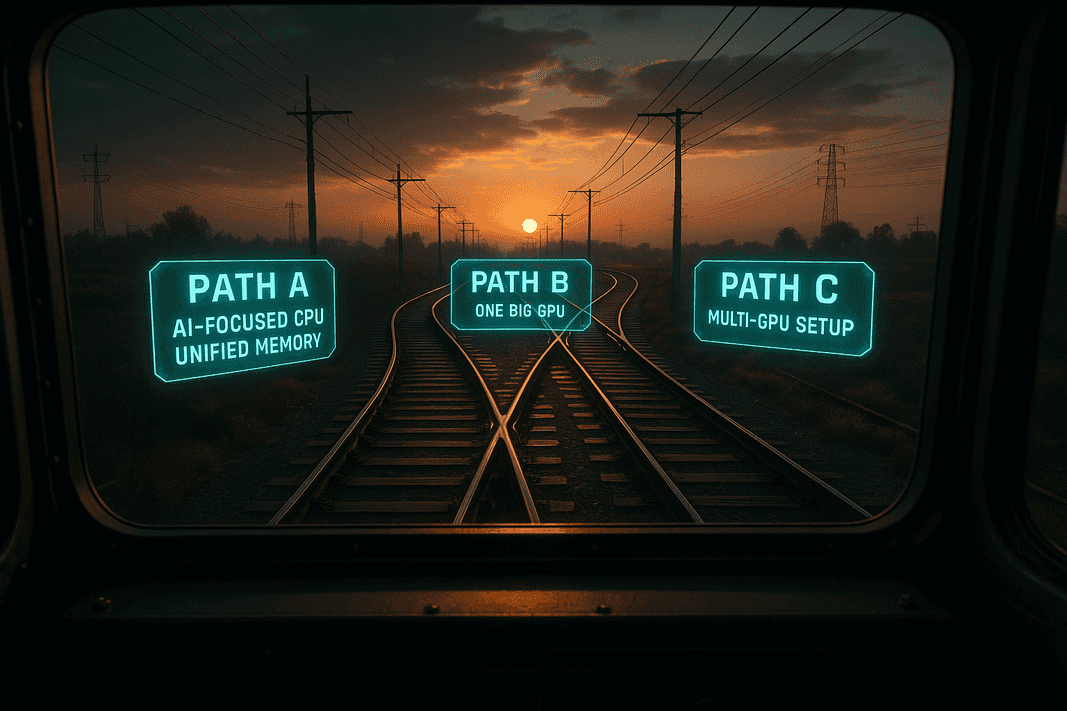

There isn't just one path you can take when setting up a home AI lab. We'll describe three main paths you could go for:

- Path A: AI-focused CPU + Unified Memory (Apple M-series or AMD Ryzen AI Max+ 395)

- Path B: One Big GPU (e.g. RTX 5090, 32 GB)

- Path C: Multi-GPU Setup (newer mid-range or older high-end cards)

Let's look at each of them.

Path A: AI-focused CPU (CPU with an NPU) and Unified Memory

This is the path most people want to take when they talk about building a home AI lab. And yes, it's relatively simple, but very expensive 💰. For example, an Apple Mac mini with an M4 Pro chip and 64 GB of unified memory will set you back around €2500 here in Spain.

This price seems close to the €2000 limit we set ourselves, but the results won't be the same:

- Inference and fine-tuning speeds will be significantly worse (4-5x slower)

- Fine-tuning larger models (>10B) will be almost impossible

- No CUDA support

- No upgrade path or tinkering options - you're locked into the hardware you get

A few advantages are lower power usage, less heat, smaller size, and larger unified memory (but remember: it's shared between the OS, apps, and your AI experiments).

AMD's Ryzen AI Max+ 395, on the other hand, has a slightly better story than Apple's M-series. Reported inference speeds are a bit higher (though still about 3× slower than proper GPUs). It uses ROCm under the hood, which mainstream AI libraries support better than Apple's stack, but the software is still fairly immature.

Here's a quick breakdown:

- Apple M-series (e.g. M4 Pro): usually gives you 20-35 tokens/second (inference, 7B @ Q4); ~7 TFLOPS

- AMD Strix Halo (Ryzen AI Max+ 395): around 50 tokens/second (inference, 7B @ Q4); ~59 TFLOPS theoretical max, ~37 TFLOPS in practice

Note: We really like the Strix Halo's potential. We've seen some AliExpress builds for about €2100 that include 128 GB of unified memory. The upside is that they're affordable and you can, in theory, fine-tune larger models or use larger context windows. The downside is that everything takes much longer (for example, 42 hours vs 4 hours, roughly 10× slower).

Path B: One Big GPU (e.g. RTX 5090, 32 GB)

This sounds like a relatively simple option. Even though 32 GB is less than 48 GB, it might be a good starting point. However, at the time of writing, an RTX 5090 costs around €2700 → and that's just the GPU, without the rest of the system.

The specs are excellent, but the capacity is still lower than what you'd get from other approaches.

Alternatively, you could go for something like the NVIDIA RTX™ 6000 Ada Generation. The cheapest we found was about €7500 (and some refurbished units for ~€4200), but all of these options are serious overkill for a home setup.

Path C: Multi-GPU Setup (Newer Mid-Range or Older High-End Cards)

This is our preferred approach ⭐. You can also pick between several sub-options:

- 2 × RTX 3090 (24 GB each = 48 GB total)

- 3 × RTX 4060 Ti (16 GB each = 48 GB total)

- 4 × RTX 3060 (like ours, 12 GB each = 48 GB total)

- Or mix and match any good deals you can find online
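Pooling VRAM across cards only works because inference frameworks split the model's layers between GPUs; llama.cpp, for example, exposes this through its `--tensor-split` option, which takes one proportion per GPU. The helper below is a hypothetical illustration of a proportional split for mixed cards, not llama.cpp's actual algorithm: it ignores the KV cache and the uneven size of the embedding and output layers.

```python
def split_layers(n_layers: int, vram_gb: list[float]) -> list[int]:
    """Hypothetical helper: give each GPU a share of layers proportional to its VRAM.

    Uses largest-remainder rounding so the counts always add up to n_layers.
    """
    total = sum(vram_gb)
    exact = [n_layers * v / total for v in vram_gb]
    counts = [int(x) for x in exact]
    # Hand the leftover layers to the GPUs with the largest fractional remainders.
    leftover = n_layers - sum(counts)
    by_remainder = sorted(range(len(vram_gb)), key=lambda i: exact[i] - counts[i], reverse=True)
    for i in by_remainder[:leftover]:
        counts[i] += 1
    return counts


if __name__ == "__main__":
    # An 80-layer model on a mixed 24 GB + 12 GB + 12 GB setup (48 GB total).
    print(split_layers(80, [24, 12, 12]))
    # The same model on three 16 GB cards.
    print(split_layers(80, [16, 16, 16]))
```

Because the split is proportional, mixing cards of different sizes is fine as long as the total VRAM is enough.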

This path offers flexibility 🔀, a wide range of budgets 💵, and many upgrade opportunities. Standard higher-end desktop motherboards can offer two full PCIe physical slots (16× and 8×), so they can accommodate two GPUs. However, most consumer CPUs can't provide more than 24 PCIe lanes (for example: 16 lanes for GPUs, 4 lanes for the chipset, and 4 lanes for NVMe SSDs).

There's another category of desktop systems: workstation-class 💪 (as opposed to consumer-grade). That's where you'll find CPUs like AMD Threadripper. These are usually very expensive, but you can find older, used options at affordable prices. Their main advantage is that they're designed to handle far more PCIe lanes - typically 48-64 lanes (vs 24), which is exactly what's needed to power more than two GPUs.
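To make the lane arithmetic concrete, here's a minimal budget check. It assumes 24 CPU lanes for a typical consumer platform (as described above) and 64 for a 1st-gen Threadripper, with every device wired to the CPU at the listed width. Real boards route some devices through the chipset instead, so treat this as a sketch.

```python
def lane_budget(cpu_lanes: int, devices: dict[str, int]) -> int:
    """Return the CPU lanes left after wiring each device at its requested width.

    A negative result means the configuration doesn't fit at full width, and
    some slots would have to drop to x8 or x4 (or hang off the chipset).
    """
    return cpu_lanes - sum(devices.values())


# Assumed slot widths for illustration.
CONSUMER = {"GPU": 16, "chipset link": 4, "NVMe": 4}
WORKSTATION = {"GPU 1": 16, "GPU 2": 8, "GPU 3": 16, "GPU 4": 8,
               "chipset link": 4, "NVMe": 4}

if __name__ == "__main__":
    print(lane_budget(24, CONSUMER))                      # one GPU: fits exactly
    print(lane_budget(24, {**CONSUMER, "GPU 2": 16}))     # second x16 GPU: doesn't fit
    print(lane_budget(64, WORKSTATION))                   # four GPUs on Threadripper
```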

3. 🐝 QuietBee's Core - Workstation-Grade at Consumer-Grade Prices

After some analysis and comparison of all the possible paths, we chose Path C → the multi-GPU setup as the best option for our needs.

After a quick look at the market, we saw that there were some solid second-hand GPU deals. For example, we could get an RTX 3090 with 24 GB of VRAM (used, of course) for around €600.

The workstation-grade desktop parts market was also quite promising. A used motherboard that can handle four GPUs was selling for about €200-€300 (more or less), and CPUs were going for roughly the same price.


HEDTs (High-End Desktop platforms) come in a few main types:

- AMD Threadripper: You can find 1st gen parts for very low prices. Even the motherboards are often in the €100-€150 range, and CPUs are similarly affordable.

- AMD Epyc: Codenames “Rome,” “Milan,” or “Genoa.” These are more expensive, but they support a huge number of PCIe lanes and large amounts of memory. They're a very solid alternative if you can find used parts at a good price, although they're usually designed for server racks rather than desktop builds.

- Intel HEDTs: Core X (Skylake-X, etc.). You'd need to target something like the i9-7980XE, which runs on an X299 motherboard (the older X99 platform only takes earlier Haswell-E/Broadwell-E CPUs). It delivers strong performance (~44 PCIe 3.0 lanes), but it's not guaranteed to support three or more GPUs, so you need to check the details.

We settled on the Threadripper platform 🧵⭐ and targeted 1st gen because it was cheap, widely available, and we only needed it to act as a “host” for our GPUs - nothing more.

Price-wise, here's a quick comparison:

[Image: price comparison of the HEDT platforms]

4. ⚔️ GPU Face-Off - Choosing the Brains of QuietBee

Two concrete options stand out (the best so far, price-wise):

| Setup | VRAM | Cost per Card | Total Cost |
|---|---|---|---|
| 2 × RTX 3090 | 48 GB total | ~€600 used | ~€1,200 (used) |
| 3 × RTX 5060 Ti | 48 GB total | ~€450 new | ~€1,400 (new) |
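A quick way to compare the two options on raw capacity is cost per gigabyte of pooled VRAM. This sketch simply reuses the approximate totals from the table; prices move quickly, so plug in current ones.

```python
def eur_per_gb(total_cost_eur: float, vram_gb: float) -> float:
    """Price of one gigabyte of pooled VRAM."""
    return total_cost_eur / vram_gb


# Totals from the table above (approximate market prices at the time of writing).
SETUPS = {
    "2 × RTX 3090 (used)": (1200, 48),
    "3 × RTX 5060 Ti (new)": (1400, 48),
}

if __name__ == "__main__":
    for name, (cost_eur, vram_gb) in SETUPS.items():
        print(f"{name}: €{eur_per_gb(cost_eur, vram_gb):.2f} per GB of VRAM")
```

On price per GB alone, the used 3090s win (about €25 vs €29), so the case for the 5060 Ti rests on the other factors: warranty, heat, power, and form factor.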

After a deeper analysis, we've settled (for now) on 3 × RTX 5060 Ti. Here's why:

[Image: comparison of 2 × RTX 3090 vs 3 × RTX 5060 Ti]

As you can see, there are several reasons we're leaning toward 3 × RTX 5060 Ti:

- New: we can book them as a company expense, and we get a warranty and better resale value

- Smaller form factor: we can fit three of them comfortably (though not four)

- They run cooler: we (hopefully) won't overheat the case, and they draw a bit less power

- Newer cores: in some cases (especially with quantized models), both inference and fine-tuning will be faster

5. 🛠️ Building QuietBee - Process and Components

We tried two platforms for “shopping,” but eventually settled on Wallapop, a Spanish second-hand marketplace (the other tempting one was eBay Spain).

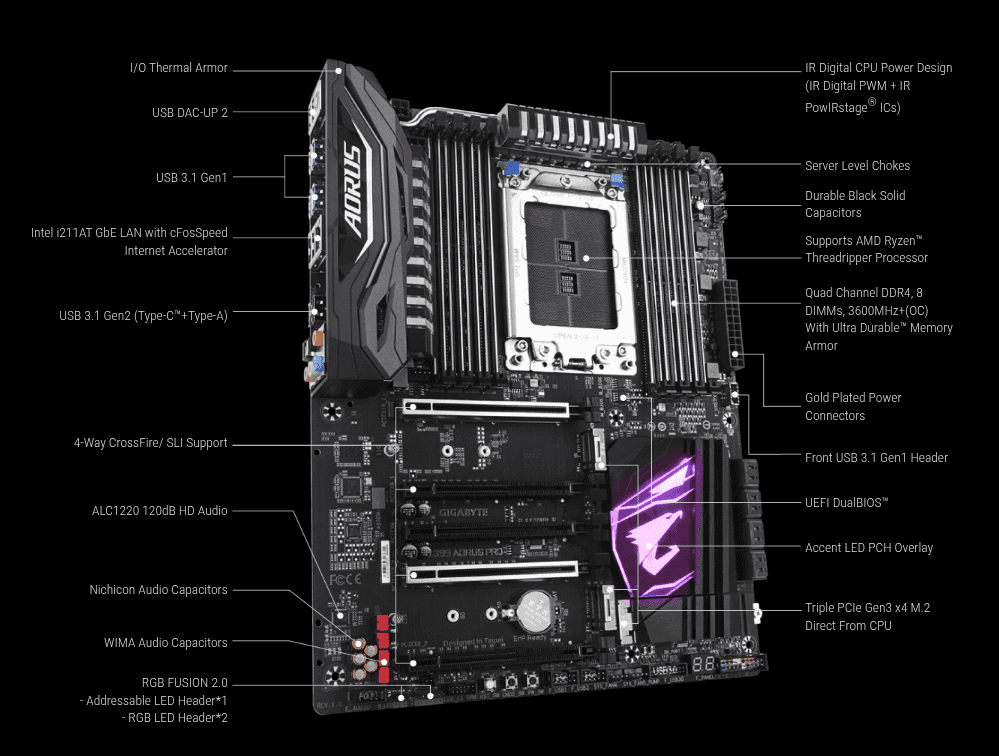

We 🎯 targeted bundles, and we found an amazing deal: €434 (€399 + insurance and delivery) for an AMD Ryzen Threadripper 1950X, X399 Aorus Pro (motherboard), and a Gigabyte RTX 3060.


Why did we pick a bundle with an RTX 3060? A good used RTX 3060 with 12 GB of VRAM normally goes for around €200, and I wanted to upgrade my wife's desktop anyway. That means we effectively got the Threadripper 1950X and X399 for roughly €230 in total, which is a very good deal 🤑.

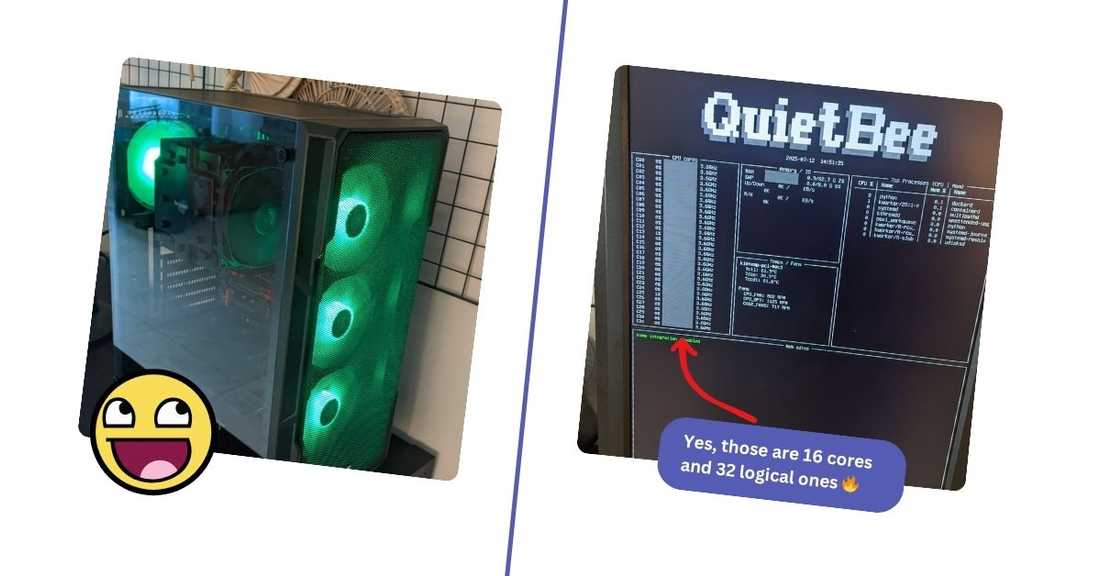

We were extremely happy when they arrived — well packaged and well preserved!


Then we needed something to cool this monster. After a bit of digging, we landed on this:

[Image: be quiet! Dark Rock Pro TR4 cooler]

For cooling, we picked the be quiet! Dark Rock Pro TR4 for €39. It's not the most powerful cooler, but it offered good value for the price. Also, we're not planning to run inference on the CPU, and be quiet! claimed it would actually be… quiet 😉

That's where our AI home lab got its name → QuietBee! (or QB! 🐝)

Next, we needed a small and simple GPU - not for AI, but just for display output as a temporary solution. We found an Nvidia GT 710 (2 GB) for about €10.

The rest we bought new from PcComponentes:

- Tempest Garrison ATX Case - €52

- 64 GB RAM - 4 × 16 GB Forgeon DDR4 3200 MHz (two 2 × 16 GB kits), ~€100 for both kits

We also reused an old SSD and an old PSU to power everything.

A bit later, we added another 32 GB of RAM - Crucial Ballistix DDR4 3200 CL16 for about €52 (used, via Wallapop). We chose this kit because we plan to eventually buy another identical set, and these are popular and cheap enough.

✅ QuietBee Core Build (~€483, excl. GPUs):

- 🧵 Threadripper 1950X + X399 Aorus Pro - €230 (bundle, excl. 3060)

- ❄️ be quiet! Dark Rock Pro TR4 cooler - €39

- 🧠 96 GB DDR4 RAM (64 GB Forgeon + 32 GB Crucial) - €152

- 🖥️ GT 710 (2 GB) display GPU - €10

- 🏠 Tempest Garrison ATX case - €52

- 🔋 PSU (500 W, reused) - €0 (this will need an upgrade!)

- 💽 SSD (reused) - €0

So, let's re-check the budget. We have around €1500 left to spend, and we still need to buy three GPUs and a PSU capable of powering everything (and those aren't cheap either). We probably won't stay under the €2000 mark, because:

- 3 × Palit GeForce RTX 5060 Ti are about €490 each → ~€1470 total

- That leaves us with roughly €50 for a PSU, while a good one costs around €100-150

This means the final budget will be roughly €2100. But because we're buying the GPUs new, we can treat them as a company expense. Also, VAT plays a part here, so if you're tax-savvy, you're actually staying slightly below the €2000 mark.
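The budget arithmetic can be double-checked in a few lines. All figures are the ones quoted in this section; the €100-150 PSU range is the estimate from the list above.

```python
# Core build prices quoted in this section, in euros.
CORE_BUILD_EUR = {
    "Threadripper 1950X + X399 Aorus Pro": 230,
    "be quiet! Dark Rock Pro TR4": 39,
    "96 GB DDR4 (64 GB Forgeon + 32 GB Crucial)": 100 + 52,
    "GT 710 display GPU": 10,
    "Tempest Garrison case": 52,
    "PSU (reused)": 0,
    "SSD (reused)": 0,
}
BUDGET_EUR = 2000
GPUS_EUR = 3 * 490            # 3 × Palit RTX 5060 Ti
PSU_RANGE_EUR = (100, 150)    # estimated price of a suitable PSU

core = sum(CORE_BUILD_EUR.values())
left_after_core = BUDGET_EUR - core
left_for_psu = left_after_core - GPUS_EUR
final_range = (core + GPUS_EUR + PSU_RANGE_EUR[0], core + GPUS_EUR + PSU_RANGE_EUR[1])

if __name__ == "__main__":
    print(f"Core build: €{core}")                                # €483
    print(f"Left after core build: €{left_after_core}")          # €1517
    print(f"Left for the PSU: €{left_for_psu}")                  # €47
    print(f"Final total: €{final_range[0]}-€{final_range[1]}")   # €2053-€2103
```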

The X399 is a beast. It allows for up to four GPUs (two at 8× and two at 16× lanes) and has plenty of room for RAM and SSDs if needed - so we won't be locked into anything.
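Since X399 is a PCIe 3.0 platform, the x8 vs x16 split translates into a concrete bandwidth difference. The sketch below uses the PCIe 3.0 signalling rate (8 GT/s per lane with 128b/130b encoding) to compute theoretical one-direction bandwidth and the best-case time to copy a 16 GB model shard onto a card; the shard size is an assumption matching one 16 GB GPU, and real-world throughput will be lower.

```python
def pcie3_gb_per_s(lanes: int) -> float:
    """Theoretical one-direction PCIe 3.0 bandwidth in GB/s.

    8 GT/s per lane, 128b/130b line encoding, 8 bits per byte.
    """
    return lanes * 8 * (128 / 130) / 8


def copy_seconds(size_gb: float, lanes: int) -> float:
    """Best-case time to move size_gb across a PCIe 3.0 link."""
    return size_gb / pcie3_gb_per_s(lanes)


if __name__ == "__main__":
    for lanes in (16, 8):
        print(f"x{lanes}: {pcie3_gb_per_s(lanes):.2f} GB/s, "
              f"16 GB shard in ~{copy_seconds(16, lanes):.1f} s")
```

When a model is split by layers across GPUs, generally only small activation tensors cross between cards during inference, so the x8 slots mostly affect load times; workloads that exchange more data between cards, such as multi-GPU training, feel the difference more.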

Even if we decide to upgrade the setup to a newer CPU and motherboard - for example, if we wanted to move to a newer Threadripper platform or a bigger board to really accommodate four large GPUs - we'd only need to spend around €300 more. We could sell our current parts for about €200-250 and buy the new ones for about €500-600. But jumping to 3rd-gen Threadripper can be very expensive 🙂

6. 🧪 Results and Future Plans - Next Steps

So here we are, at step 1 of 4:

- ✅ Get the base for the AI lab - DONE

- 🌱 Buy 1st GPU

- 🌱 Buy 2nd GPU + Enhance Cooling

- 🌱 Buy 3rd GPU

We've navigated the hot waters 🌊 of the AI shovel market and found ourselves a sweet path to walk on. 🐝 QuietBee is ready and humming away on its CPU, awaiting more GPU upgrades. For now, we're just using the current setup to run models on the CPU (we have 32 logical cores, so why not), but we're working toward buying GPUs in the coming weeks.

What's the end goal? We're aiming for affordable, framework-specific models (think: Drupal, WordPress, Laravel, Svelte with Runes) with state-of-the-art quality in their niche: models as good as Claude Sonnet (or better), at the price tag of Claude Haiku 3.5 or lower.

🗺️ How Do We Get There?

- ✅ Try out a small fine-tuning experiment → Done

- ✅ Create a testing framework for niche domains (e.g. Drupal 11) → Done

- ✅ Create the base for our AI lab → Done

- 🔧 Define a process for distilling data for fine-tuning, reusable across frameworks → In progress

- 🧪 Fine-tune a super small base model and retest it to see if we can enhance a model's niche knowledge (e.g. Drupal)

- 🛠️ Enhance our home AI lab

- 🚀 Fine-tune a proper model focused on Drupal, test it, and release it as open weights

- 🌱 …and more to come!

If you want to be part of the journey or help us make it real, please consider supporting the effort via my Ko-Fi page.

💡 Inspired by this article?

If you found this article helpful and want to discuss your specific needs, I'd love to help! Whether you need personal guidance or are looking for professional services for your business, I'm here to assist.
