Boosting SEO with AI: Enhancing Images

Do you have a sizable website with hundreds of images lacking SEO-friendly alt/title attributes? Discover how AI can remedy this. 🌐

The Importance of Image Alt/Titles in SEO

When a website has images, setting the alt and title attributes in HTML isn't just good practice; it's essential. Numerous articles explain why, such as HubSpot’s and Semrush’s articles on image SEO.

If you have just a few images, you can (and probably should) edit them manually and specify those attributes. But what about platforms hosting hundreds? Or those with user-contributed images? We usually simplify image uploads for users by removing the extra text fields, so the images end up without any attributes. Here’s where AI becomes your ally. 💡

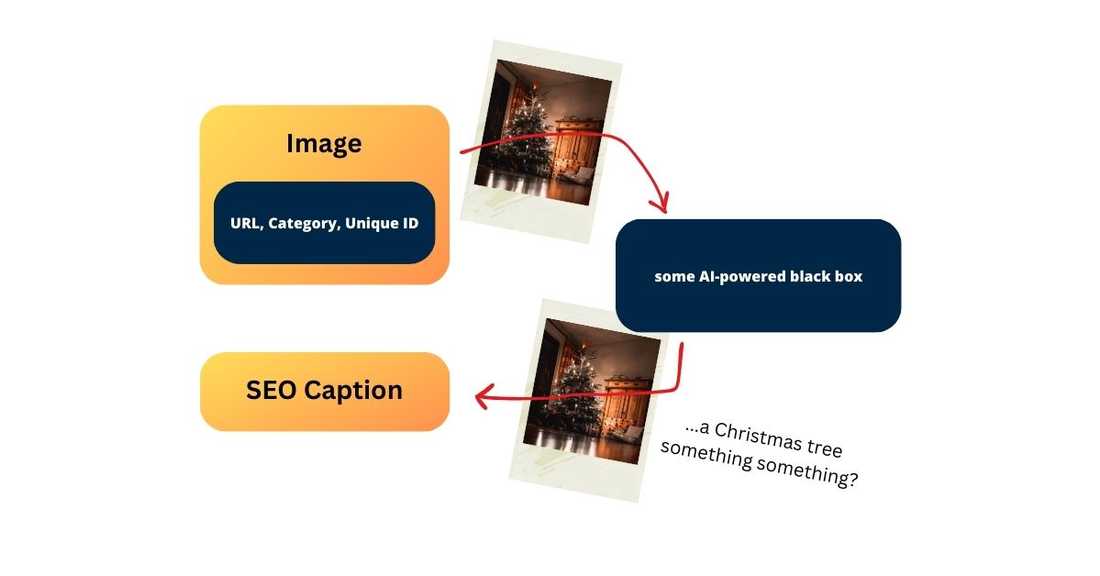

The AI Solution: Image-to-Text Models and LangChain

Our setup's centerpiece is an image-to-text model from Salesforce (alternative models are also available on Hugging Face). The model turns an image's contents into a text description. It works pretty well across a range of image sizes and qualities, almost like black magic!
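To make the captioning step concrete, here is a minimal sketch using the Hugging Face `transformers` image-to-text pipeline. The checkpoint name `Salesforce/blip-image-captioning-base` is my assumption (the article only says "Salesforce model"), and the helper names are made up for illustration.

```python
from typing import Any, Callable

# Assumed checkpoint; swap in whichever image-to-text model you prefer.
MODEL_ID = "Salesforce/blip-image-captioning-base"


def load_captioner(model_id: str = MODEL_ID) -> Callable[[str], Any]:
    """Build an image-to-text pipeline (downloads the weights on first use)."""
    # Imported lazily so the helpers below work without transformers installed.
    from transformers import pipeline

    return pipeline("image-to-text", model=model_id)


def extract_caption(outputs: list[dict[str, str]]) -> str:
    """Pull the caption string out of the pipeline's list-of-dicts output."""
    if not outputs:
        return ""
    return outputs[0].get("generated_text", "").strip()


def caption_image(captioner: Callable[[str], Any], image_path: str) -> str:
    """Caption a single image, given a local path or a URL."""
    return extract_caption(captioner(image_path))
```

Typical use would be `caption_image(load_captioner(), "photo.png")`. Loading the model once and reusing the captioner for every image avoids paying the start-up cost repeatedly.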

Alright, that’s a good start, but we can enhance it further. We'll employ an AI (LLM) to refine these descriptions into SEO-optimized captions, using specific prompts and context. 🚀

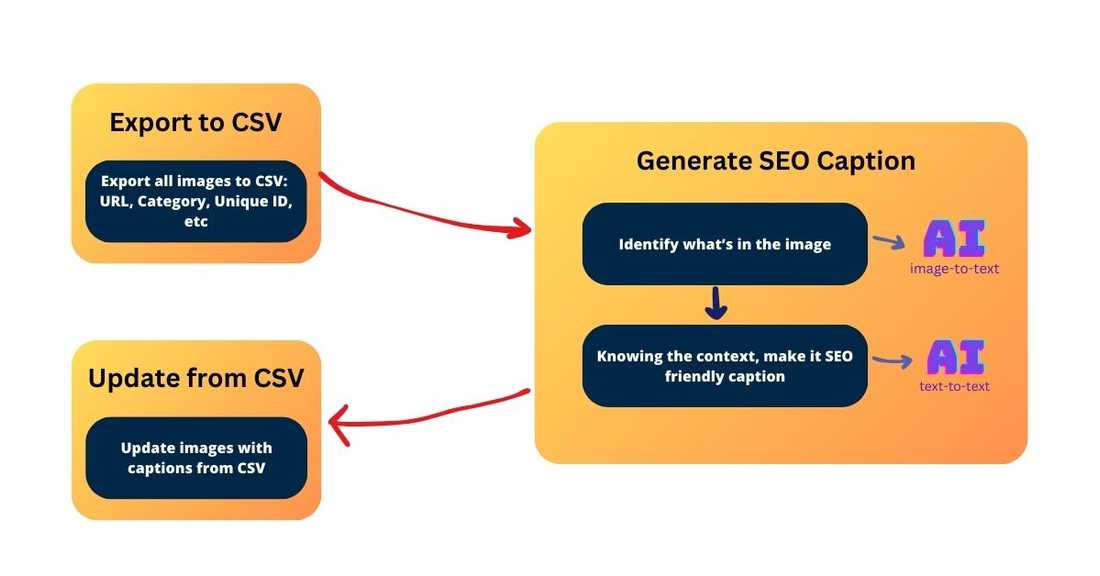

Data Extraction and Pipeline Setup

Before diving into the pipeline, we need to extract the image data; how you do that depends on your website's backend. It could be anything from SQL queries to PHP functions that output CSV. Categorizing images helps tailor the context given to the AI, so make sure the export includes a column specifying where each image comes from: a category such as "user-contributed image" or "article cover image".
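As a rough illustration of how the category column can drive the AI context, the sketch below reads an export and attaches a context sentence to each row. The column names (`id`, `url`, `category`), the category values and the context sentences are all hypothetical; adapt them to your own export.

```python
import csv
import io

# Hypothetical category names and context sentences; adjust to your own site.
CATEGORY_CONTEXT = {
    "user_contributed": "Photo uploaded by a community member to their public profile.",
    "article_cover": "Cover image shown at the top of a blog article.",
}
DEFAULT_CONTEXT = "Image published on the website."


def load_image_rows(csv_file) -> list[dict[str, str]]:
    """Read exported rows and attach the context sentence for each category."""
    rows = []
    for row in csv.DictReader(csv_file):
        row["context"] = CATEGORY_CONTEXT.get(row["category"], DEFAULT_CONTEXT)
        rows.append(row)
    return rows


# Tiny in-memory export standing in for a real CSV file.
sample = io.StringIO(
    "id,url,category\n"
    "1,https://example.com/img/1.png,article_cover\n"
    "2,https://example.com/img/2.png,forum_post\n"
)
rows = load_image_rows(sample)
print(rows[0]["context"])
```

Unknown categories fall back to a generic context, so a new image source doesn't break the run.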

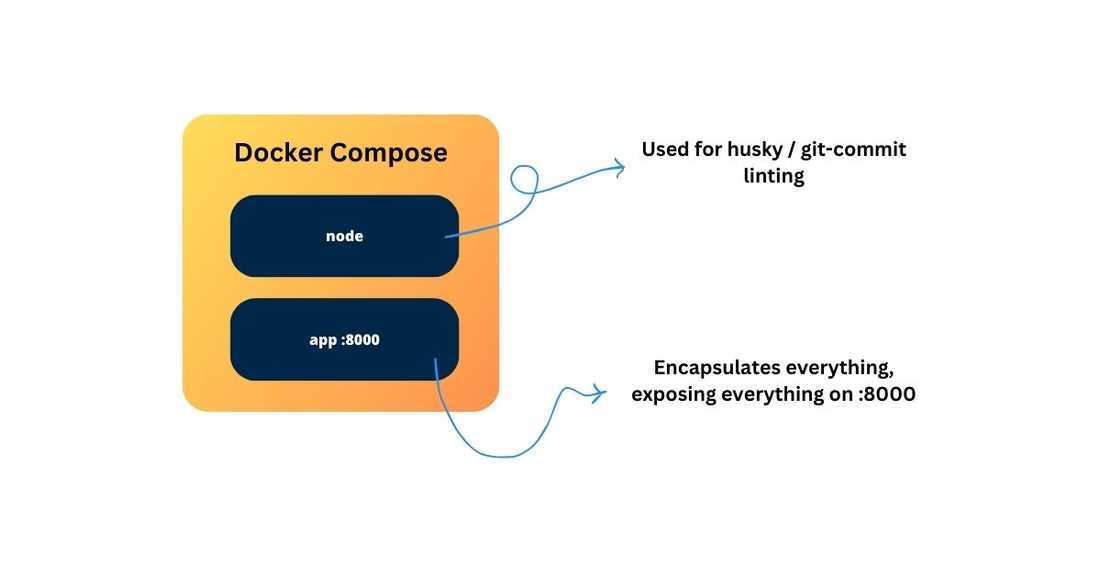

With a consistent CSV export method, let's look at the pipeline. I've used a docker compose template for a containerized setup, ideal for future microservice deployment and CI/CD integration. 🐳

Development Tips:

- For local development with all dependencies installed on the host system, start FastAPI with: `uvicorn main:app --reload`

- For dependency-free local development, use the virtual environment from the Docker container: you can reuse it on your host (assuming you have the same Python version). You’ll have to make sure the Python paths on the host match those inside the container. In my case, I had to create a symlink on the host at: /usr/local/bin/python3.10

- If using CUDA locally, map your host's current CUDA version into the container as volumes to save resources.

FastAPI and LangChain Integration

FastAPI serves as our microservice foundation, complemented by LangChain for LLM flexibility beyond OpenAI models (you can later pair it with any other LLM, even open source ones). This combination allows us to craft a powerful, scalable solution. 🛠️

Essential Packages (pyproject.toml - using Poetry):

Docker Compose and Dockerfile:

✅ Docker-compose.yml:

✅ Dockerfile:

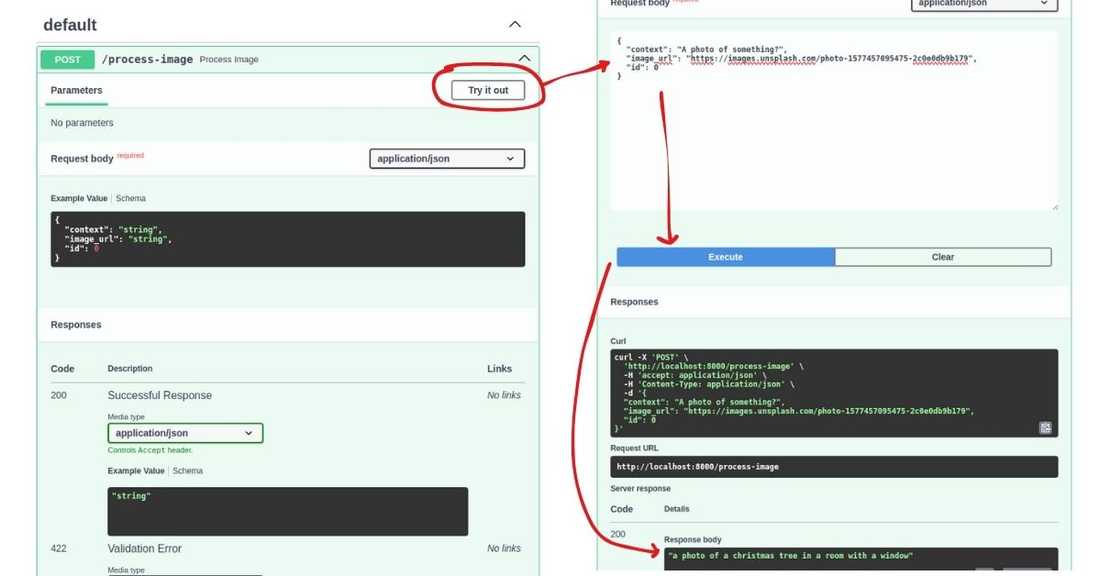

Run `docker compose up` and test the setup at: http://localhost:8000/docs

Crafting the Caption: Generating Alt/Title Tags

With the image recognition part up and running, let's explore LangChain to refine our captions. Using GPT-3.5 (the model behind ChatGPT), we can polish our SEO-friendly captions with this prompt template:

✅ Prompt Template:

"""

You are an advanced AI specializing in creating SEO-optimized image captions for use as alt text or title text. Your captions must follow SEO best practices, focusing on concise, descriptive language that includes relevant keywords. You will be given a context describing where the image was used or uploaded and an initial caption generated by an image captioning tool. It is your job to refine this caption to make it more relevant and SEO-friendly, correcting any contextual mistakes made by the initial tool.

Context: {context}

Initial Image Caption: {caption}

Refined SEO-optimized Image Caption:

"""
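To show how the two placeholders get filled, here is a small sketch in plain Python. The template is a shortened stand-in for the full one above, and `refine_caption` takes any callable that maps a prompt string to a reply string. With a LangChain chat model that callable could be something like `lambda p: chat.invoke(p).content`; treat that wiring as an assumption rather than the article's actual snippet.

```python
from typing import Callable

# Shortened stand-in for the full template above; only the placeholders matter here.
PROMPT_TEMPLATE = (
    "You refine image captions for SEO.\n\n"
    "Context: {context}\n\n"
    "Initial Image Caption: {caption}\n\n"
    "Refined SEO-optimized Image Caption:"
)


def build_prompt(context: str, caption: str) -> str:
    """Fill the {context} and {caption} placeholders."""
    return PROMPT_TEMPLATE.format(context=context.strip(), caption=caption.strip())


def refine_caption(llm: Callable[[str], str], context: str, caption: str) -> str:
    """Send the filled prompt to the LLM and tidy up its reply."""
    reply = llm(build_prompt(context, caption))
    # Models often wrap the answer in quotes or add trailing whitespace.
    return reply.strip().strip('"').strip()


# Stub LLM so the sketch runs without an API key.
print(refine_caption(lambda prompt: '"Decorated Christmas tree"', "Article cover image", "a tree"))
```

Keeping the LLM behind a plain callable makes it easy to swap models later, which is the flexibility LangChain is used for here.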

Updated to work seamlessly with LangChain, our snippet now looks like this:

✅ LangChain Snippet (same function, but this time with LangChain):

Test it out on our setup page and see the magic happen! ✨

New reply is:

Implementing the API for Real-world Use

Our API is live and ready at http://localhost:8000. Let’s put it to work with a Python script that updates the captions in our CSV, one by one. I suggest starting with a small batch of 10-20 images to ensure everything is running smoothly.

Here’s an example of the script.

Example Python Script (with LangChain):

If adjustments are needed, tweak the main prompt or contexts for each image category until you're satisfied with the outcomes. 🔄
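The batch run described above could be sketched roughly like this. The endpoint path `/caption`, the JSON field names and the CSV columns (`url`, `context`) are hypothetical, so adjust them to whatever your API and export actually use. The demo at the bottom uses a stub instead of the real API so it runs without a server.

```python
import csv
import io
import json
import urllib.request
from typing import Callable

API_URL = "http://localhost:8000/caption"  # hypothetical endpoint path


def fetch_caption(image_url: str, context: str) -> str:
    """POST one image to the (assumed) captioning endpoint and return its caption."""
    payload = json.dumps({"image_url": image_url, "context": context}).encode("utf-8")
    request = urllib.request.Request(
        API_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.load(response)["caption"]


def caption_rows(reader, writer, fetch: Callable[[str, str], str], limit: int = 20) -> int:
    """Copy rows from reader to writer, captioning only the first `limit` rows."""
    processed = 0
    for row in reader:
        if processed < limit:
            row["caption"] = fetch(row["url"], row["context"])
            processed += 1
        else:
            row["caption"] = ""  # left empty for a later run
        writer.writerow(row)
    return processed


# Demo with in-memory CSV data and a stub; pass fetch=fetch_caption for a real run.
source = io.StringIO(
    "url,context\n"
    "https://example.com/1.png,Article cover image\n"
    "https://example.com/2.png,User contributed image\n"
)
target = io.StringIO()
reader = csv.DictReader(source)
writer = csv.DictWriter(target, fieldnames=[*reader.fieldnames, "caption"])
writer.writeheader()
processed = caption_rows(reader, writer, fetch=lambda url, ctx: "stub caption", limit=1)
print(target.getvalue())
```

The `limit` argument is there to support the small first batch: review those captions, tweak the prompt or contexts, then raise the limit.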

The Final Step: Updating Your System

After perfecting your captions, it's time for the final act – updating your website's images with these new, AI-generated alt/title tags. Run an update script within your system to apply these changes, ensuring your images are now SEO-friendly and more accessible. 🌍
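What the update script looks like depends entirely on your backend. Purely as an illustration, here is a sketch that assumes a SQLite database with a hypothetical `images(id, alt, title)` table and writes each caption into both columns using parameterized queries.

```python
import sqlite3


def apply_captions(conn: sqlite3.Connection, captions: dict[int, str]) -> None:
    """Write each caption into the alt and title columns of its image row."""
    with conn:  # commits on success, rolls back if any statement fails
        conn.executemany(
            "UPDATE images SET alt = ?, title = ? WHERE id = ?",
            [(text, text, image_id) for image_id, text in captions.items()],
        )


# In-memory database standing in for the real one (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE images (id INTEGER PRIMARY KEY, alt TEXT, title TEXT)")
conn.execute("INSERT INTO images (id) VALUES (1), (2)")
apply_captions(conn, {1: "Decorated Christmas tree in a living room"})
print(conn.execute("SELECT id, alt FROM images ORDER BY id").fetchall())
```

Running the update inside a single transaction means a failure halfway through leaves the existing attributes untouched.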

Embracing AI in SEO and Web Development

The world of open-source models offers a plethora of solutions for tasks you'd think were impossible, and in record time too. Hugging Face is an excellent resource to explore these models. Using LangChain combined with LLMs creates a solid, efficient workflow. Plus, containerizing your setup as a microservice using templates not only accelerates development but also keeps things organized and scalable. 🚀

Other ways to enhance the process (not covered in this article):

- Consider using the same pipeline to rename the images (from 123.png to something like christmas-tree.png), making sure you don't end up with duplicate names; suggested by Andrian Valeanu.

- Consider setting a length limit for the captions; also suggested by Andrian Valeanu.

- You can also rewrite the image files (a bit more complex) so that the captions, and maybe the authorship, are embedded as metadata straight into the image. This requires re-saving all the images; also suggested by Andrian Valeanu 😉
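The first two suggestions are easy to prototype. The sketch below is one possible take, not part of the original pipeline: the 125-character default is an assumed limit, and both function names are made up for illustration.

```python
import re
import unicodedata


def truncate_caption(caption: str, max_length: int = 125) -> str:
    """Collapse whitespace and cut at a word boundary if the caption is too long."""
    caption = " ".join(caption.split())
    if len(caption) <= max_length:
        return caption
    truncated = caption[:max_length]
    # If the cut landed mid-word, drop the partial word.
    if caption[max_length] != " " and " " in truncated:
        truncated = truncated.rsplit(" ", 1)[0]
    return truncated.rstrip(" ,;:-")


def slugify_filename(caption: str, extension: str, taken: set[str]) -> str:
    """Turn a caption into a unique, URL-friendly file name."""
    ascii_text = unicodedata.normalize("NFKD", caption).encode("ascii", "ignore").decode("ascii")
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-") or "image"
    candidate = f"{slug}.{extension}"
    counter = 2
    while candidate in taken:  # append -2, -3, ... until the name is free
        candidate = f"{slug}-{counter}.{extension}"
        counter += 1
    taken.add(candidate)
    return candidate


used_names: set[str] = set()
print(slugify_filename("Christmas Tree", "png", used_names))
print(slugify_filename("Christmas Tree", "png", used_names))
```

The `taken` set is what guards against duplicates; in a real run you would seed it with the file names that already exist on the site.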

I'm committed to creating more articles about how developers and entrepreneurs like you can leverage these Open Source models in practical, hands-on scenarios. If you find these articles useful, consider supporting my work on Patreon. Every bit of support is greatly appreciated! 💖

💡 Inspired by this article?

If you found this article helpful and want to discuss your specific needs, I'd love to help! Whether you need personal guidance or are looking for professional services for your business, I'm here to assist.
