Whisper Voice to Code

Let’s talk about productivity 📈. I use AI pretty frequently (duh, shocker! 😊). In VSCode, GitHub Copilot works pretty well, but case by case, even after it makes a bunch of suggestions and changes, some things still aren’t as perfect as I’d expect. I constantly find myself explaining simple things to the AI:

- “don’t forget to use Drupal 10’s best practices…”

- “where are the dependencies? Hello! Dependency injection!”

- “It seems you forgot to add that interface to the newly created service…”

Or, outside of VSCode, when I’m just brainstorming ideas with ChatGPT or Claude, I find myself typing and typing and typing: pages-long essays, prompt after prompt, back and forth.

Typing takes too much time, so I tend to gravitate to the mic input built into the OpenAI mobile app (because it uses Whisper). I found myself starting a conversation in the 📱 phone app and then continuing it on the 🖥️ web (desktop), and I really liked it. For more nuanced things I’d type, but when I wanted to dive into deep explanations and feed pages of content to the AI, voice became my go-to tool.

What are the alternatives?

- Using Chrome’s speech-to-text: the results are very inaccurate, especially with abbreviations, geeky terms and programming jargon.

- Using ChatGPT’s “Voice Mode” on the web: I don’t want to “talk” to the AI. I just want someone to transcribe my ideas so I can edit and polish them a bit, and then send them to the AI.

- Using ChatGPT’s Android app 📱: sure, but then I can’t paste the text into VSCode, Slack, Jira, emails or other tools. Also, for some reason, it only transcribes up to ~2-3 minutes of audio (it used to be more than 5 minutes). I want no limits.

Huh, this brings me here 👇

The general idea

The idea was to take an existing self-hosted web service built around Whisper and extend it with a small UI that does exactly what I need.

My target was: https://github.com/ahmetoner/whisper-asr-webservice

My fork 🔀 became: https://github.com/HumanFace-Tech/whisper-asr-with-ui

Here’s the result, with an example:

The goal? 🎯 Say it once, paste it anywhere - VSCode, Slack, Jira, emails - without having to fiddle around.

Below I’ll go into the details of how it works, and how you can install and customize it for your own needs.

What it is & how it works

Let’s first analyze where we want to get:

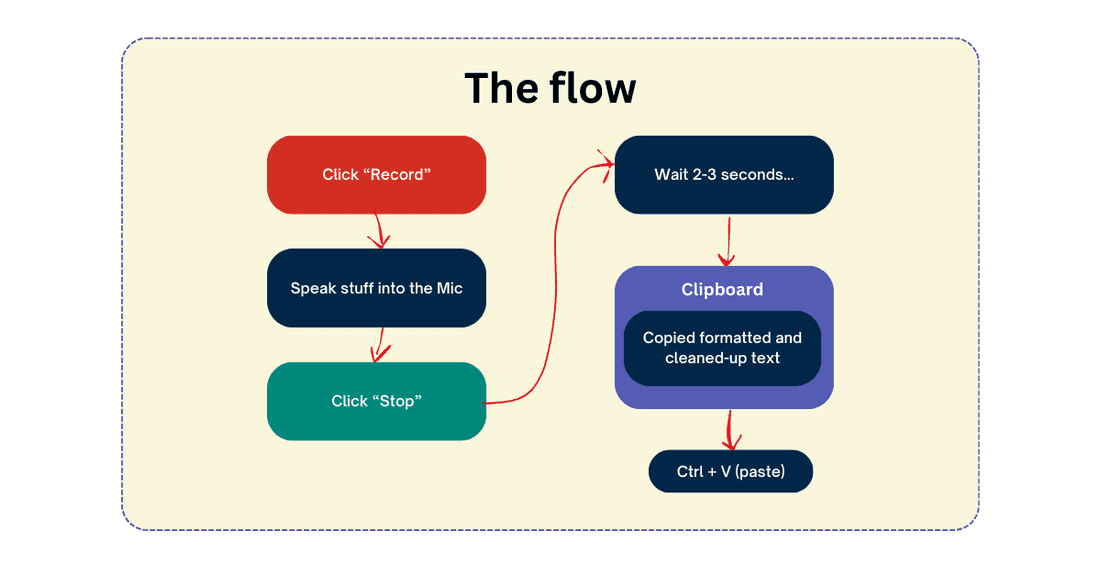

So, our steps are:

- Click Record 🔴

- Speak stuff into the MIC 🎙️

- Click Stop ✋

- Wait 2-3 seconds ⏳

- Paste the pre-formatted, polished text anywhere 🎯
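The steps above boil down to a three-stage pipeline: audio in, raw transcript, polished text out. Here’s a minimal Python sketch of that flow, assuming the transcription and polishing stages are passed in as plain functions. The names `voice_to_text`, `transcribe` and `polish` are hypothetical; the real tool does this from its index.html.

```python
from typing import Callable

# Hypothetical stage signatures (illustration only, not the project's code).
Transcriber = Callable[[bytes], str]  # audio bytes -> raw transcript
Polisher = Callable[[str], str]       # raw transcript -> polished text


def voice_to_text(audio: bytes, transcribe: Transcriber, polish: Polisher) -> str:
    """Run the record -> transcribe -> polish flow and return text ready to paste."""
    raw = transcribe(audio).strip()
    if not raw:
        # Nothing usable was transcribed: skip the LLM round trip entirely.
        return ""
    return polish(raw).strip()


if __name__ == "__main__":
    # Stand-in stages so the flow can be tried without any services running.
    fake_transcribe = lambda audio: "  so um we need a drupal service with dependency injection  "
    fake_polish = lambda text: text.replace("so um ", "").capitalize() + "."
    print(voice_to_text(b"\x00\x01", fake_transcribe, fake_polish))
```

Keeping the stages as injectable functions makes it easy to swap the Whisper backend or the LLM without touching the flow itself.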

I used https://github.com/ahmetoner/whisper-asr-webservice, a neat little web-service wrapper around Whisper. It has various engines and models to choose from. I had already used it ~1 year ago in the OrangePi AI Assistant project.
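To give a feel for what the web service expects, here’s a stdlib-only Python sketch of uploading a recording to its `/asr` endpoint. It assumes the upstream defaults as I understand them (port 9000, a multipart field named `audio_file`, and `task` / `output` / `language` query parameters); double-check them against the interactive API docs of your running instance. The helper names are hypothetical.

```python
from __future__ import annotations

import urllib.parse
import urllib.request
import uuid

# Assumed default: the upstream Docker image listens on port 9000.
ASR_BASE_URL = "http://localhost:9000"


def build_asr_url(base_url: str, task: str = "transcribe", output: str = "txt",
                  language: str | None = None) -> str:
    """Build the /asr URL; when language is omitted, Whisper detects it itself."""
    params = {"task": task, "output": output}
    if language:
        params["language"] = language
    return f"{base_url.rstrip('/')}/asr?{urllib.parse.urlencode(params)}"


def encode_multipart(field: str, filename: str, data: bytes,
                     boundary: str | None = None) -> tuple[bytes, str]:
    """Encode one file field as multipart/form-data; returns (body, content_type)."""
    boundary = boundary or uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def transcribe(audio: bytes, filename: str = "recording.webm",
               base_url: str = ASR_BASE_URL, language: str | None = None) -> str:
    """POST the recording to the ASR service and return the plain-text transcript."""
    body, content_type = encode_multipart("audio_file", filename, audio)
    request = urllib.request.Request(
        build_asr_url(base_url, language=language),
        data=body,
        headers={"Content-Type": content_type},
        method="POST",
    )
    # Long recordings can take a while to transcribe, hence the generous timeout.
    with urllib.request.urlopen(request, timeout=120) as response:
        return response.read().decode("utf-8")
```

With the service running, `transcribe(open("note.webm", "rb").read())` should return the raw transcript as plain text.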

So I forked it and adjusted a few bits here and there. The final project is https://github.com/HumanFace-Tech/whisper-asr-with-ui

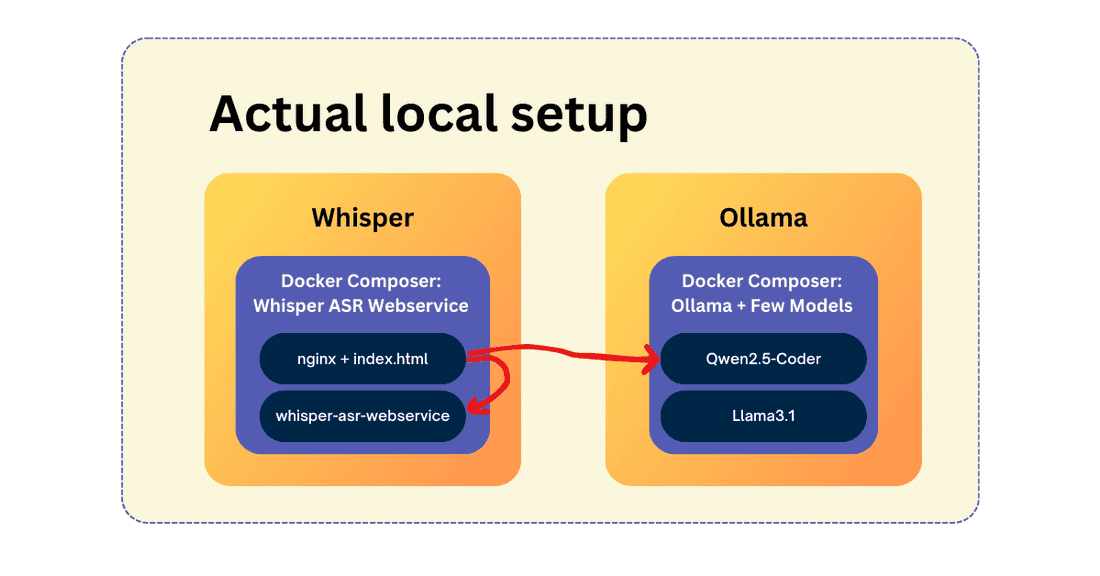

Most of my adjustments, as well as the steps to run it, are explained in the project’s README, but here’s the TL;DR: I created index.html and modified docker-compose.yml. In the end, I use a small nginx container (I know, overkill! But hey, it’s fast to configure, saves time and works well 😉) to serve my index.html on a specific port.

–

For the setup to work, I’m also using Ollama, but you can use any LLM backend you’re comfortable with. Ollama turns raw transcriptions into polished prose with one smart prompt, transforming messy thoughts into professional-looking text in seconds (like in the demo above). It even auto-corrects stuff. If you don’t have a local GPU, I highly recommend https://groq.com/, as it’s blazing fast and powerful enough for the task.
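The polishing step is a single LLM call. Here’s a minimal Python sketch against Ollama’s `/api/generate` endpoint, assuming Ollama runs on its default port 11434 and you’ve already pulled a model. The model name `llama3.1` and the prompt text are placeholders, not the prompt shipped in the project’s index.html.

```python
from __future__ import annotations

import json
import urllib.request

# Assumptions: Ollama on its default port and an example model you have pulled.
OLLAMA_URL = "http://localhost:11434/api/generate"
DEFAULT_MODEL = "llama3.1"  # placeholder; use whatever `ollama list` shows

# Hypothetical polishing prompt, not the one used by the project.
POLISH_PROMPT = (
    "Rewrite the following dictated text into clear, well-structured prose. "
    "Fix grammar and obvious transcription mistakes, keep technical terms, "
    "and do not add new information. Return only the rewritten text.\n\n"
    "Dictated text:\n{transcript}"
)


def build_polish_payload(transcript: str, model: str = DEFAULT_MODEL) -> dict:
    """Build a non-streaming /api/generate request body."""
    return {
        "model": model,
        # str.format only parses the template, so braces inside the transcript are safe.
        "prompt": POLISH_PROMPT.format(transcript=transcript.strip()),
        "stream": False,  # ask for one JSON object instead of a token stream
    }


def polish(transcript: str, model: str = DEFAULT_MODEL, url: str = OLLAMA_URL) -> str:
    """Send the raw transcript to Ollama and return the polished text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(build_polish_payload(transcript, model)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        # With stream=False, the whole completion comes back in the "response" field.
        return json.loads(response.read())["response"].strip()
```

Most of the “smart” part lives in the prompt: telling the model not to add information keeps it a transcription editor rather than a co-author.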

If you want to change the endpoint or pick a different model, just edit the HTML here:

https://github.com/HumanFace-Tech/whisper-asr-with-ui/blob/315ee7b325970f3240c49f3464aaa7f52b8f26f4/index.html#L332
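Since both Ollama and Groq expose an OpenAI-compatible chat completions endpoint, one way to make the endpoint and model easy to swap is to keep them in a small preset table. This is a hypothetical Python sketch, not how index.html is structured; the `Backend` presets, the model names and the `GROQ_API_KEY` variable name are all assumptions.

```python
from __future__ import annotations

import json
import os
import urllib.request
from dataclasses import dataclass

SYSTEM_PROMPT = "Polish the user's dictated text. Fix grammar, keep technical terms, add nothing."


@dataclass(frozen=True)
class Backend:
    """Where the polishing request goes: the endpoint and model you'd otherwise edit by hand."""
    url: str
    model: str
    api_key_env: str | None = None  # env var holding a bearer token, if the backend needs one


# Example presets (assumed values; adjust the model names to what you actually use).
BACKENDS = {
    "ollama": Backend("http://localhost:11434/v1/chat/completions", "llama3.1"),
    "groq": Backend("https://api.groq.com/openai/v1/chat/completions",
                    "llama-3.1-8b-instant", api_key_env="GROQ_API_KEY"),
}


def build_request(backend: Backend, transcript: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the chosen backend."""
    headers = {"Content-Type": "application/json"}
    if backend.api_key_env:
        headers["Authorization"] = f"Bearer {os.environ[backend.api_key_env]}"
    payload = {
        "model": backend.model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": transcript},
        ],
    }
    return urllib.request.Request(backend.url, data=json.dumps(payload).encode("utf-8"),
                                  headers=headers, method="POST")


def polish(transcript: str, backend_name: str = "ollama") -> str:
    """Send the transcript to the selected backend and return the polished text."""
    request = build_request(BACKENDS[backend_name], transcript)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.loads(response.read())
    return body["choices"][0]["message"]["content"].strip()
```

Switching to Groq is then `polish(text, "groq")` with the API key exported; the request body stays identical, so only the preset changes.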

Picking the right Whisper model for the job can be tricky: every time you change the model (an environment variable in docker-compose.yml), you’ll need to bring everything down and back up again.

I know, it’s pretty simple and not sexy, but I’m just sharing a tool I personally use daily 😀. Check out the next steps 👇

Next steps…

~1 year ago, I started working on a project called Whisper-All: a simple, always-present tray app that runs on Windows / Linux / macOS (it’s an Electron app). It follows the same concept as this tool, but it’s configurable and will use keyboard shortcuts to capture audio anywhere and copy the result to the clipboard.

It’s also open source; I just need to find the time to finish it. With that app, you can simply paste your Groq API key or run everything locally (for privacy reasons) and boost your productivity.

Assuming agents come along soon, it would be a neat thing to have.

Let’s keep in touch 😉

💡 Inspired by this article?

If you found this article helpful and want to discuss your specific needs, I'd love to help! Whether you need personal guidance or are looking for professional services for your business, I'm here to assist.
